
The release of the AI-enhancing quantum large language model marks a turning point in how machines process and respond to human language. By combining quantum computing with advanced AI, researchers have built a system that responds faster, tracks context more deeply, and operates with greater efficiency. This is not just an incremental improvement. It is a deliberate rethinking of what language models can achieve when they draw on both technologies.

Moving beyond the limits of classical computing, the model opens up new possibilities for natural, nuanced communication between humans and machines while addressing the growing demand for sustainable, high-performance AI systems.

Large language models have come a long way, learning from enormous datasets and running on massive networks of classical processors. Each new generation becomes more capable, but this progress has come at a steep cost: training and running these models demands immense power and resources. The AI-enhancing quantum large language model offers a different path forward by shifting part of the workload to quantum circuits. Unlike classical bits, which hold either 0 or 1, qubits can exist in a superposition of both states at once, which lets quantum circuits explore certain patterns and connections in data more efficiently. This means the model can tackle certain complex tasks more quickly while using less energy than conventional systems.
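The idea that a qubit can hold multiple states at once, usually called superposition, can be illustrated with a few lines of ordinary Python. This is only a classical simulation of a single qubit, not code from the model described here; the function names `apply_hadamard` and `measurement_probabilities` are hypothetical and chosen for illustration.

```python
import math

def apply_hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (a, b),
    where a and b are the amplitudes of |0> and |1>."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def measurement_probabilities(state):
    """Born rule: each outcome's probability is the squared
    magnitude of its amplitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)

zero = (1.0, 0.0)              # the qubit starts in |0>, like a classical bit
plus = apply_hadamard(zero)    # now an equal superposition of |0> and |1>
p0, p1 = measurement_probabilities(plus)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

After the Hadamard gate, measuring the qubit yields 0 or 1 with roughly equal probability, which is the sense in which it is in "both states" before measurement. Simulating many qubits this way becomes expensive quickly, since the number of amplitudes doubles with each added qubit.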

What sets this model apart is how seamlessly it blends classical and quantum computing to solve one of AI’s biggest hurdles: maintaining accuracy without sacrificing speed or scalability. Its quantum components excel at fine-tuning probabilities and predicting sequences, two tasks that tend to trip up classical models as language becomes more subtle and context-heavy. By leaning on quantum circuits for these steps, the model handles nuanced expressions, regional slang, and ambiguous phrasing with a more natural and grounded touch, making its responses feel more authentic.

At the core of this launch is the idea that quantum computing can augment AI rather than replace it. Quantum-enhanced machine learning has been a subject of research for over a decade, but this marks the first time it has been deployed at scale in a production-grade language model. The quantum circuits in the model are particularly suited for optimization and sampling tasks, which are central to how language models choose the next word in a sequence or adjust weights during training.

By introducing quantum-based optimization layers, the model can explore a much broader solution space without consuming excessive memory or processing power. This feature is especially helpful in capturing long-range dependencies in text, a task that traditional models often struggle with when sequences are very long. Moreover, the quantum layer introduces an element of stochastic behavior that prevents the model from becoming stuck in repetitive or overly cautious patterns, making its output feel more natural and less formulaic.
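To make the role of sampling and stochastic behavior concrete, here is a minimal classical sketch of how a language model can pick the next word from a set of scores. It is not the quantum layer described above, whose details the article does not give; the vocabulary, scores, and the helper names `softmax` and `sample_next_word` are assumptions made for illustration.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities. A lower temperature sharpens
    the distribution; a higher one flattens it."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(vocab, scores, temperature=1.0, rng=None):
    """Draw one word at random, weighted by its softmax probability."""
    rng = rng or random.Random()
    probs = softmax(scores, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical candidates and scores for the next word.
vocab = ["cat", "dog", "bird"]
scores = [2.0, 1.0, 0.1]

rng = random.Random(0)  # seeded so repeated runs give the same draws
print([sample_next_word(vocab, scores, temperature=0.7, rng=rng) for _ in range(5)])
```

Because the word is drawn at random rather than always taking the top score, the output varies between runs, which is one common way to keep generated text from becoming repetitive. The temperature parameter controls how much randomness is allowed.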

Another advantage is energy efficiency. Classical supercomputers running advanced language models can draw enormous amounts of electricity. Early benchmarks of this AI-enhancing quantum large language model show a measurable reduction in power usage for comparable workloads. The savings are still modest, but they point toward a more sustainable path for scaling AI systems in the future.

This launch has broad implications for multiple areas of society. In research, the quantum-enhanced AI can analyze scientific papers, generate hypotheses, and even help design experiments with a greater sensitivity to subtle patterns in data. This could accelerate progress in fields such as medicine, climate science, and engineering.

For businesses, the model offers enhanced performance in natural language tasks, including customer service, document analysis, and market prediction. The ability to process complex, context-rich queries with greater speed and nuance could lead to better user experiences and more insightful analytics. Enterprises that previously found the cost of running large-scale language models prohibitive may benefit from the energy and efficiency gains offered by this hybrid approach.

On a more personal level, consumers may encounter this technology in chat assistants, translation services, educational tools, and accessibility applications. The enhanced comprehension and more context-aware responses could make interactions feel less robotic and more like a genuine exchange. Particularly for people who rely on assistive technologies for communication, the added subtlety in language understanding can improve usability and comfort.

While the launch marks an impressive achievement, the integration of quantum computing with AI is still in its early days. The current model uses a small number of qubits, and while they already deliver measurable benefits, the full potential of quantum-enhanced AI will depend on progress in quantum hardware. Scalability remains a challenge, as today’s quantum processors are limited in size and prone to errors.

Researchers are optimistic, though. The modular design of the AI-enhancing quantum large language model means that as quantum chips improve, they can be swapped into the system, increasing performance without needing to redesign the entire architecture. This makes it a future-proof approach to advancing language models.

Some experts believe that the eventual goal will be a fully hybrid AI system, where classical and quantum processors work together across all layers of the model, each handling the tasks for which it is best suited. For now, the launch serves as a glimpse of what such collaboration can achieve, setting the stage for further advances in the years to come.

The launch of the AI-enhancing quantum large language model marks a significant shift in how we develop and operate language-based AI. It combines the strengths of two distinct technologies to produce a system that is more efficient, nuanced, and environmentally friendly than its purely classical predecessors. As quantum hardware matures, the potential of this hybrid approach will continue to grow, offering improved performance and broader applications. For now, it stands as a testament to the possibilities unlocked when we look beyond traditional boundaries and bring together ideas from different fields to solve complex problems. This marks the beginning of a new chapter in AI development, one that promises to reshape our interaction with machines in subtle yet profound ways.

Advertisement

A leading humanoid robot company has introduced its next-gen home humanoid designed to assist with daily chores, offering natural interaction and seamless integration into home life

Explore what large language models (LLMs) are, how they learn, and why transformers and attention mechanisms make them powerful tools for language understanding and generation

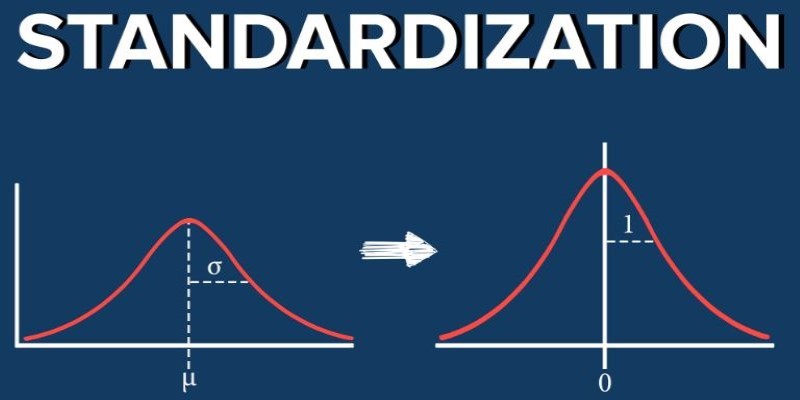

What standardization in machine learning means, how it compares to other feature scaling methods, and why it improves model performance for scale-sensitive algorithms

Learn how to use ChatGPT for customer service to improve efficiency, handle FAQs, and deliver 24/7 support at scale

Advertisement

What makes StarCoder2 and The Stack v2 different from other models? They're built with transparency, balanced performance, and practical use in mind—without hiding how they work

PaLM 2 is reshaping Bard AI with better reasoning, faster response times, multilingual support, and safer content. See how this powerful model enhances Google's AI tool

How Amazon AppFlow simplifies data integration between SaaS apps and AWS services. Learn about its benefits, ease of use, scalability, and security features

Discover the top 10 AI voice generator tools for 2025, including ElevenLabs, PlayHT, Murf.ai, and more. Compare features for video, podcasts, education, and app development

Advertisement

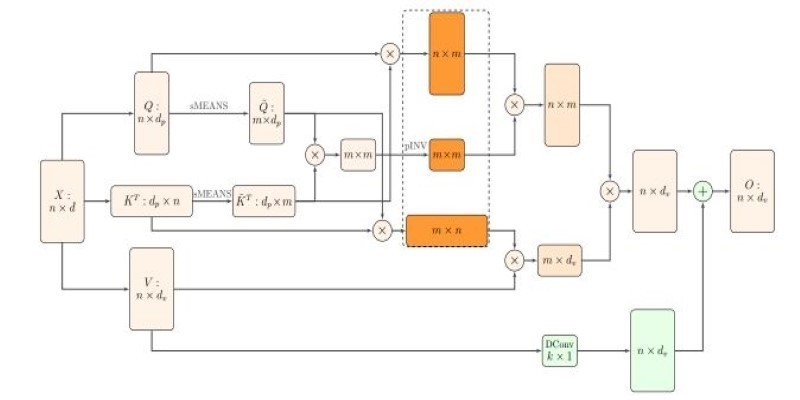

How Nyströmformer uses the Nystrmmethod to deliver efficient self-attention approximation in linear time and memory, making transformer models more scalable for long sequences

Ahead of the curve in 2025: Explore the top data management tools helping teams handle governance, quality, integration, and collaboration with less complexity

ChatGPT Search just got a major shopping upgrade—here’s what’s new and how it affects you.

How Llama Guard 4 on Hugging Face Hub is reshaping AI moderation by offering a structured, transparent, and developer-friendly model for screening prompts and outputs