
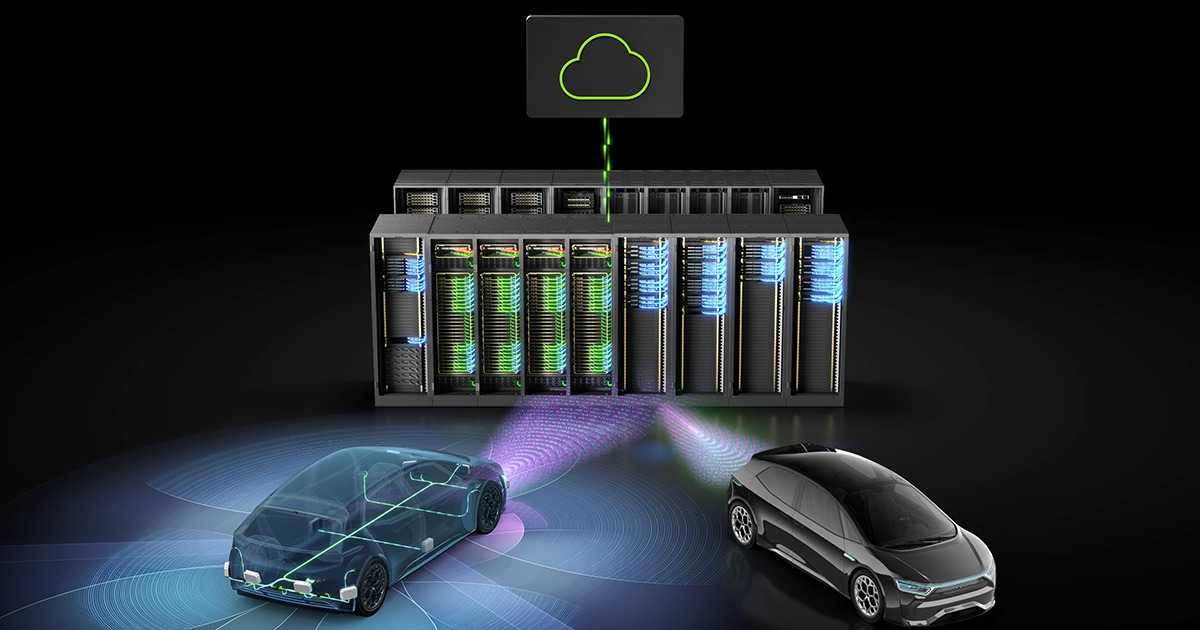

When you think of cars driving themselves, the mind often drifts to science fiction or early-stage experiments, not something rolling out with serious backing. Nvidia has just made that picture much harder to hold on to. At GTC Paris, the company confirmed that its full-stack self-driving software platform has officially moved into production. This is not a prototype or a beta; it is the real thing.

With this step, Nvidia isn’t talking in hypotheticals. The system is integrated, functional, and already heading into the hands of automakers. It's built on years of development, real-world testing, and close collaboration with industry partners. What makes this rollout more than just another press announcement is how complete the ecosystem is, and the fact that Nvidia is no longer just selling chips.

Rather than offering bits and pieces to be bolted onto someone else’s framework, Nvidia developed an end-to-end platform. It combines Drive OS, the DriveWorks middleware, perception and mapping, and the application stack, all running on Nvidia’s Drive Orin. This is more than sensors and AI models stitched together; the system is purpose-built from the ground up for autonomy.

Drive Orin, the hardware muscle, is a system-on-a-chip designed specifically for autonomous vehicles. It handles everything from sensor fusion to real-time decision-making. The software stack running on it ranges from detecting traffic lights to navigating complex intersections, all while keeping latency low and safety standards high.

And here’s something easy to overlook but crucial: the same platform that’s going into cars is also used for simulation and training. That makes updates smoother, testing more realistic, and development cycles faster. Developers aren’t working in a lab with one version and deploying another to vehicles on the road. It’s all aligned.

Nvidia isn't crossing its fingers and hoping car companies pick up the tech. Automakers have already signed on. Vehicles equipped with this stack are either already in production or set to enter production soon, including models from established manufacturers known for taking their time before putting new technology into production. That strongly suggests the validation and testing have been done and the relevant regulatory requirements have been met.

During the GTC Paris update, it became clear that Nvidia isn’t acting alone. Its ecosystem includes partnerships with tier-one suppliers, mapping firms, sensor manufacturers, and tool developers. Everyone is working from the same baseline, which reduces integration headaches and speeds up the overall rollout.

This kind of commitment only happens when a technology is ready to scale. Not “maybe next year.” Not “once regulations catch up.” It’s happening now.

What also stands out is how global the rollout strategy has become. Nvidia’s partners aren’t clustered in one region. They span North America, Europe, and Asia, making the platform adaptable to different driving laws, road behaviors, and market demands. This level of coordination isn’t just about distribution; it’s about tailoring the tech to meet regional compliance requirements while keeping a unified software backbone.

One of the system's standouts is its adaptability. Instead of being locked into a fixed pattern or relying on narrow AI models trained for specific environments, the platform's models are continuously retrained and improved, then delivered to vehicles as updates.

This is where Nvidia's work in generative AI and foundation models comes into play. The driving models are trained on massive, diverse datasets using Nvidia DGX infrastructure and refined via simulation using Drive Sim on Omniverse. That kind of setup allows the AI to be trained on rare scenarios without waiting for them to happen on the road. A pedestrian in a costume darting into the street? It's already seen that. Sudden lane block with zero warning? Covered.

The important point is that these aren’t just demo scenarios. They’re stress-tested in simulation, integrated back into the live software stack, and then pushed to vehicles as updates in a controlled, safe manner. With regulatory approval frameworks in place, these updates aren't guesswork; they are a managed evolution of the software.

It’s easy to focus only on what happens in the vehicle, but the Nvidia platform stretches well beyond that. The development environment around it—training tools, simulation software, hardware testing rigs, and data management platforms—forms an ecosystem built to reduce friction at every step.

Drive Sim on Omniverse, for example, lets teams simulate entire cities with real-time lighting, weather, and traffic systems. The driving model interacts with virtual pedestrians, cyclists, road signs, and even construction zones. Engineers can simulate millions of miles in a day, with exact reproducibility and metrics tracking.

And here’s where it gets very real for automakers: what used to take weeks to test can now be done in hours. Scenarios that once required expensive, complex real-world data collection can now be reproduced and validated in a simulation loop. The barrier to entry is lower, but the quality bar stays high.

It also plays nicely with existing systems. Nvidia isn’t demanding a complete overhaul of what’s already in place. The platform is modular, which means manufacturers can integrate parts of it gradually or go full stack if they’re building something from scratch.

The announcement at GTC Paris wasn’t just about new tech specs or partnerships—it marked the point where Nvidia’s vision moved from concept to commercial reality. The full-stack self-driving platform is no longer a lab project. It’s in vehicles. It’s being used. It’s tested. It’s here.

And if history is any guide, this won’t be a slow rollout. With the tools Nvidia has built around development, simulation, and deployment, the adoption curve could move a lot faster than we’ve seen before. Automakers now have a tested, scalable platform in their hands. It’s not often that a company manages to align hardware, software, training tools, and real-world deployment all under one roof. But that’s exactly what Nvidia has done.

Advertisement

Learn how to use ChatGPT for customer service to improve efficiency, handle FAQs, and deliver 24/7 support at scale

Curious about ChatGPT jailbreaks? Learn how prompt injection works, why users attempt these hacks, and the risks involved in bypassing AI restrictions

Discover the top 10 AI voice generator tools for 2025, including ElevenLabs, PlayHT, Murf.ai, and more. Compare features for video, podcasts, education, and app development

What data lakes are and how they work with this step-by-step guide. Understand why data lakes are used for centralized data storage, analytics, and machine learning

Advertisement

Discover how Nvidia's latest AI platform enhances cloud GPU performance with energy-efficient computing.

Find the 10 best image-generation prompts to help you design stunning, professional, and creative business cards with ease.

DataRobot acquires AI startup Agnostiq to boost open-source and quantum computing capabilities.

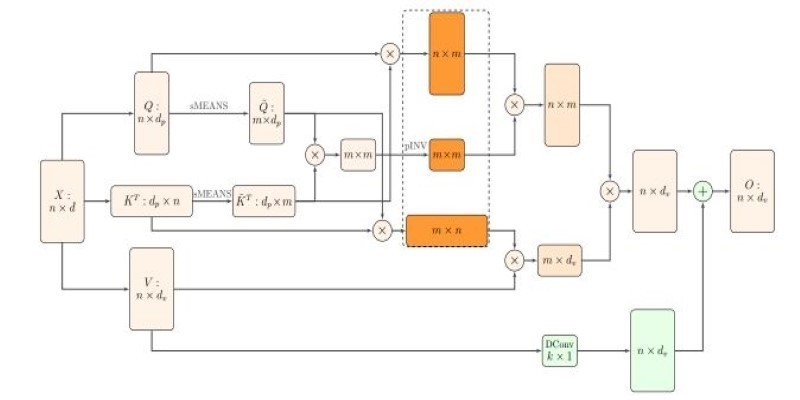

How Nyströmformer uses the Nystrmmethod to deliver efficient self-attention approximation in linear time and memory, making transformer models more scalable for long sequences

Advertisement

How Gradio reached one million users by focusing on simplicity, openness, and real-world usability. Learn what made Gradio stand out in the machine learning community

How AI is transforming traditional intranets into smarter, more intuitive platforms that support better employee experiences and improve workplace efficiency

Yamaha launches its autonomous farming division to bring smarter, more efficient solutions to agriculture. Learn how Yamaha robotics is shaping the future of farming

Learn how ZenML helps streamline EV efficiency prediction—from raw sensor data to production-ready models. Build clean, scalable pipelines that adapt to real-world driving conditions