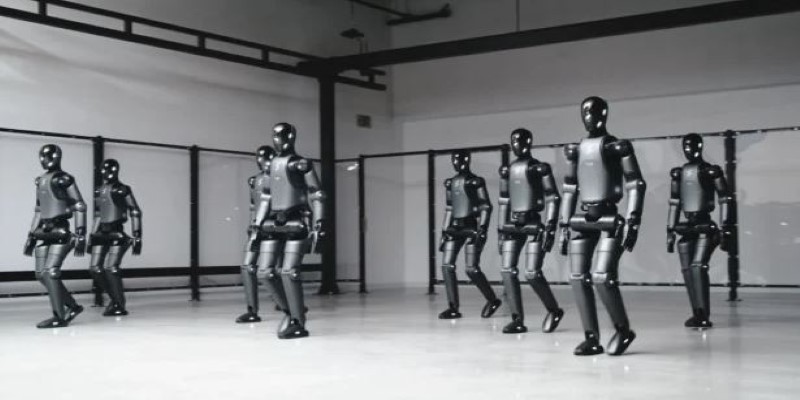

Humanoid robots have long captured people’s curiosity, but their movements often seem unnatural. They walk stiffly, struggle with balance, and quickly topple on uneven ground. Engineers have now built a humanoid robot that learns to walk like a human, not by following rigid instructions but by practicing, observing, and adjusting as it goes.

This approach mirrors how people learn to walk: through experience and feedback. Rather than simply imitating the look of walking, the robot develops a sense of balance and rhythm. It marks a significant step toward machines that can move naturally and safely among people.

Walking is deceptively simple for humans but extremely complex for machines. Each step involves coordinated muscle activity, constant balance correction, and split-second responses to changes in surface or slope. Most humanoid robots to date have relied on rigid, pre-programmed motion sequences to stay upright. These worked only on flat, predictable terrain and often failed on anything else.

The robot in this project learns to walk like a human through trial and error. Equipped with sensors in its legs, feet, and torso, it collects feedback about its weight, angles, and ground contact. Engineers fed it hours of video footage showing real people walking in different environments. The robot began to imitate this behavior: making mistakes, falling, adjusting its stance, and improving over thousands of repetitions. Over time, it learned the subtle adjustments humans take for granted, such as shortening its stride when descending, leaning forward uphill, or compensating when a foot slips. Rather than following a single pre-written pattern, it now responds to what it senses in the moment, much as a person does.

This breakthrough relies heavily on machine learning. Instead of being given explicit walking instructions, the robot relies on a control system built around a neural network trained on real-world walking data. Engineers gathered extensive motion capture recordings of people walking under varied conditions: on grass, on stairs, over uneven ground, and while carrying loads. These recordings formed a large library of human walking behavior.

During training, the robot's early attempts were awkward and unsteady. It would freeze, misstep, or fall over while working out what to do next. Each failure was recorded as data to improve the model. After enough practice, its movements smoothed out and became more balanced and more like the human examples it had studied.

A key advantage of this learning method is that it prepares the robot for unpredictable situations. Earlier robots often failed when something unexpected happened, such as a bump or a loose surface. This robot can adjust mid-step, recover its balance, and keep going. Because it doesn't need a perfectly smooth path to function, it is far more practical for real-world use.

One of the biggest hurdles in creating human-like walking is maintaining balance. Humans evolved over millions of years to stand and walk on two legs, relying on flexible muscles and reflexes. Robots are heavier and use rigid joints and motors instead of muscles, which makes balancing more difficult.

To address this, engineers redesigned the robot's body for dynamic walking. Its joints have slight flexibility, allowing them to absorb impacts and adapt to uneven surfaces. The feet are segmented and slightly curved, helping them conform to the ground rather than slipping or rocking. Another innovation is a flexible "spine" that bends slightly forward, backward, and to the side to keep the robot's weight centered. These mechanical improvements work together with the learning software to make every step more stable. The combination lets the robot handle gentle slopes, uneven paths, and minor obstacles without collapsing, something earlier models could not achieve.

There is a good reason to teach robots to walk like humans rather than simply letting them move however they can. Many spaces robots are meant to enter, such as homes, hospitals, and disaster zones, are built for human mobility. Stairs, narrow hallways, and cluttered floors can challenge wheeled or four-legged machines but are easily navigated by people. A robot that walks the same way can use the same spaces without any changes to the environment.

Natural walking also makes robots easier for people to interact with. Machines that move in a way we recognize feel safer and more predictable to be around. People can walk beside them or around them without fear of sudden, strange movements. This familiarity is especially valuable in settings where robots and people will share the same work area or living space.

The success of this robot in learning to walk like a human signals a meaningful advance. Service robots, rescue robots, and personal assistance machines could all benefit from this technology, becoming more useful and more widely accepted in daily life. It also shows how learning and adaptation can replace rigid instructions, helping robots move through the world on our terms.

Humanoid robots are still far from matching human agility or endurance, but this development brings them closer to that goal. By combining machine learning with improved mechanical design, this robot achieves a more fluid and adaptable way of walking. Rather than simply moving forward, it responds to its environment and keeps its balance in ways that feel almost natural.

Teaching a humanoid robot to walk like a human is more than a technical challenge. It shows how machines can learn through practice, adjust to their surroundings, and behave in ways people intuitively understand. Seeing the robot take steady, human-like steps after so much training reflects both progress and potential: a quiet reminder that technology continues to find ways to walk alongside us, step by step.