Most people talk about AI as if it's a black box: smart, mysterious, and ready to spit out answers. But when you ask how it makes those decisions or whether those decisions are safe, things get murky. That's where Constitutional AI comes in. It's a way to align large language models (LLMs) more closely with human intentions without simply hard-coding rules. Instead, the model is trained to follow a set of written principles.

This becomes even more interesting when applied to open LLMs. Open models bring transparency, flexibility, and customization, but that openness also means responsibility. Anyone can take the model, tweak it, and deploy it. So, the question is—how do you make sure it behaves well no matter who's using it? That’s the role Constitutional AI is trying to play.

Constitutional AI is an approach where instead of relying entirely on human feedback to teach a model how to behave, the model learns from a written “constitution” of principles. It’s like setting ground rules and then letting the model learn how to apply them.

Instead of hiring thousands of people to rate outputs and flag bad responses, Constitutional AI uses those principles as a reference. The model learns not just what to say but why it's saying it. It figures out, for example, that respecting user privacy or avoiding harmful advice isn't just about avoiding specific keywords—it's about reasoning through the intent.

This method was introduced to reduce dependence on human feedback, which is expensive and full of inconsistencies. Humans don't always agree, and even when they do, they can miss edge cases. Constitutional AI turns that around. It lets the model critique its own outputs and choose better ones based on the constitution it’s following.

With closed-source models, the company that built the model keeps control. They set the rules, run the training, and decide who gets access. That creates guardrails but also limits customization. With open LLMs, the model is out in the wild. Anyone with enough compute can retrain it, fine-tune it, or plug it into their apps.

This flexibility makes open LLMs appealing, but it also means more variation in behavior. One group might tune a model to be extra cautious, while another might push it to be more assertive or even reckless. The results? Unpredictable.

That’s where Constitutional AI fits in. It acts as a base layer of alignment that is established before anyone downstream fine-tunes the model or picks a dataset. When the training process includes Constitutional AI, the released model starts out with those principles, and they are intended to carry forward into later uses. The model doesn’t just learn from data; it learns to reason about the right thing to say.

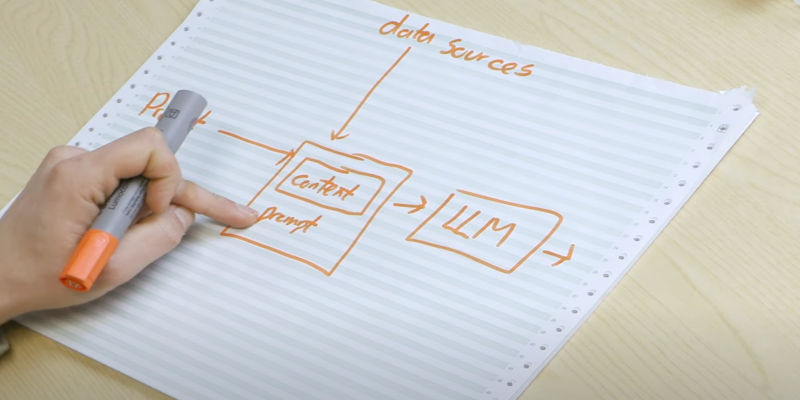

The process of applying Constitutional AI isn't just about adding a ruleset and calling it a day. There’s a training loop behind it. Here’s how it generally works:

The first step is defining the principles. These aren’t just vague ideas like “be nice.” They need to be clear, interpretable, and applicable across many situations. For example:

The list isn’t exhaustive, and different teams can write different constitutions depending on the use case. What matters is consistency and coverage.
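To make the idea of a written constitution concrete, here is a minimal Python sketch of how a team might store one. The `Principle` class, the `CONSTITUTION` list, and `render_constitution` are hypothetical names invented for this illustration, and the three clauses are only examples built from themes this article mentions. They are not taken from any published constitution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principle:
    """One clause of a constitution (hypothetical structure for illustration)."""
    key: str   # short identifier a critique can cite
    text: str  # the clause as the model will read it


# Illustrative clauses only, based on themes mentioned in this article.
CONSTITUTION = [
    Principle("privacy", "Respect user privacy."),
    Principle("harm", "Avoid giving harmful advice."),
    Principle("mental_health", "Treat all topics involving mental health with care."),
]


def render_constitution(principles):
    """Format the clauses as a numbered list that can be placed in a prompt."""
    return "\n".join(f"{i}. {p.text}" for i, p in enumerate(principles, start=1))


print(render_constitution(CONSTITUTION))
```

Keeping each clause as a short, separately addressable item makes it easier to check coverage and to cite a specific clause later in a critique.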

Once the constitution is ready, the model generates responses to various prompts—without any filtering. This gives a range of answers, from ideal to questionable. These outputs form the raw material for the next stage.

Here’s the twist—another model, or the same one, reads the responses and critiques them using the written constitution. This isn't human moderation; it’s automated. The model explains why one answer might be better than another, citing the rules it’s supposed to follow.

For example, if one output is sarcastic in a sensitive context, the model might flag it for being emotionally dismissive, pointing back to a clause like “treat all topics involving mental health with care.”
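One way to picture the critique step is as a prompt that puts the constitution, the original prompt, and the candidate response side by side and asks the critic model to cite the clause at issue. The sketch below only builds that prompt text. The template wording and the `build_critique_prompt` helper are assumptions made for this example, and the call to an actual model is left out.

```python
# Hypothetical template; real systems word their critique requests differently.
CRITIQUE_TEMPLATE = """You are reviewing a response against a written constitution.

Constitution:
{constitution}

Prompt: {prompt}
Response: {response}

Name any clause the response violates and explain why."""


def build_critique_prompt(constitution, prompt, response):
    """Return the text a critic model would receive for one candidate response."""
    clauses = "\n".join(f"- {clause}" for clause in constitution)
    return CRITIQUE_TEMPLATE.format(
        constitution=clauses, prompt=prompt, response=response
    )


critique_prompt = build_critique_prompt(
    ["Treat all topics involving mental health with care."],
    "I have been feeling low lately.",
    "Lol, cheer up.",
)
print(critique_prompt)
```

The critic's reply to this prompt is what the article calls the critique: an explanation tied to a named clause rather than a bare score.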

Now comes fine-tuning. From the set of outputs and critiques, the best responses, according to the model's reasoning, are used as training data. The model learns to produce similar outputs going forward, aligning itself more closely with the constitution.

This loop repeats with new prompts and new critiques, gradually improving the model’s behavior. Since the process relies on the model learning to reason from principles, it scales better than depending on human feedback alone.
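The loop described above can be sketched in a few lines of Python. Everything here is a stand-in: `generate_candidates` returns canned strings instead of sampling from a model, and `count_violations` uses a simple phrase match in place of a model-written critique, purely so the example runs without any model. A real pipeline would replace both with model calls and pass the resulting dataset to a fine-tuning job.

```python
def generate_candidates(prompt):
    """Stand-in for sampling several unfiltered responses from a model."""
    return [
        f"Just get over it. ({prompt})",
        f"That sounds hard. Here are some options to consider. ({prompt})",
    ]


def count_violations(response):
    """Stand-in for the critique step: counts flagged phrases.

    A real critic would be a language model reasoning over the constitution;
    phrase matching is used here only to keep the sketch self-contained.
    """
    flagged = ("just get over it",)
    return sum(phrase in response.lower() for phrase in flagged)


def build_finetuning_set(prompts):
    """Keep the least-criticized response per prompt as a training example."""
    dataset = []
    for prompt in prompts:
        candidates = generate_candidates(prompt)
        best = min(candidates, key=count_violations)
        dataset.append({"prompt": prompt, "response": best})
    return dataset


examples = build_finetuning_set(["I feel anxious about my exams."])
print(examples[0]["response"])
```

Each pass over new prompts adds more prompt and response pairs, which is the material the fine-tuning stage learns from.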

In an open setting, safety and consistency can’t be left to chance. There’s no central authority checking every version of the model once it's released. That’s why building Constitutional AI into the foundation is so important. It creates models that don’t just repeat training data—they reflect on the values they’re meant to uphold.

When someone fine-tunes a model for a specific task—say, tutoring or customer service—the foundational behavior is still influenced by the constitution. So even if the application changes, the core ethics stay intact.

Another upside is explainability. If a model says, “I can’t answer that,” it’s often unclear why. But with Constitutional AI, the model might explain, “Answering this question could spread misinformation, which goes against my design principles.” That kind of transparency matters, especially in high-stakes use cases.

Open LLMs are here to stay, and their use will only grow. But with openness comes the need for responsibility, and that’s where Constitutional AI plays a quiet but important role. It gives models a way to reason with guidelines instead of reacting blindly to examples. And for those building with open-source models, it’s a step toward safer, more consistent AI—without losing flexibility.

The promise isn’t that models will be perfect. But with the right foundation, they can at least know how to aim in the right direction.
