Microsoft has started enforcing stricter controls on how its Copilot AI tools are used, aiming to stop them from being exploited for harmful purposes. The company has introduced stronger safeguards, clearer rules, and updated guidance so that Copilot remains a helpful assistant rather than a risk. The rapid spread of generative AI into workplaces and classrooms has created opportunities, but also sparked concerns about its potential misuse. Microsoft's latest measures are meant to reassure users, discourage bad actors, and keep the technology aligned with safe and productive outcomes, even as it becomes more advanced and widely adopted.

Generative AI has changed how people work, write, and build software. Microsoft Copilot, which integrates into Office applications, Windows, and developer tools, helps users compose documents, write formulas, draft presentations, and even write code. It's one of the most widely used AI assistants, valued for saving time and reducing repetitive work. Yet as more people experimented with its capabilities, it became clear that some prompts could lead to unintended and risky outputs.

Investigations have shown that Copilot can sometimes be manipulated into writing malware, phishing campaigns, or false information. Although it was never designed for such purposes, loopholes and creative prompting exposed gaps in its safeguards. Even small-scale abuse raised alarms, since automated tools can multiply harm quickly and cheaply. Microsoft has responded by working to close those gaps, acknowledging that guardrails have to evolve alongside how people actually use, and try to misuse, the product. Leaving those gaps unchecked would have risked eroding trust among both businesses and individual users.

Growing pressure from regulators and the public has also influenced Microsoft's decision. Governments in Europe and North America have started drafting policies to hold tech companies more accountable for the harmful consequences of AI misuse. By acting early, Microsoft signals that it takes those responsibilities seriously and intends to lead rather than follow on safety and ethics.

The new approach combines updated technology, clear policy changes, and ongoing education. On the technology side, Microsoft has improved Copilot’s internal filters to detect when a prompt might be malicious. These filters look beyond simple keywords and evaluate the broader context of the request. If someone tries to get Copilot to generate ransomware, craft deceptive emails, or assist in fraud, the system is more likely to decline. Microsoft’s engineers regularly update these filters based on new patterns of misuse observed in the wild.

Policy changes now make it explicit what is and isn’t allowed. Microsoft updated its terms of service to directly prohibit using Copilot for harmful, illegal, or deceptive activities. The company has committed to enforcing these terms by restricting or suspending accounts that break them. This makes it harder for someone to claim ignorance about the rules or operate in a gray area.

Education plays a part, too. Copilot has begun displaying messages reminding users about safe use if it detects questionable inputs. These reminders don’t just block an action but explain why it’s inappropriate, encouraging people to stay within acceptable boundaries. Microsoft also launched a reporting process that allows users to flag misuse they come across. This feedback helps the company adjust its filters faster and more effectively.

Taken together, these measures aim to create a system that feels just as seamless for regular users but is more resistant to manipulation by those looking to cause harm.

For most users, these changes will hardly be noticeable. People who use Copilot for ordinary tasks — drafting content, organizing information, generating summaries, or writing code — won’t experience interruptions. The system still performs those functions reliably. But anyone trying to push the AI toward malicious output will have a very different experience. Prompts that once produced harmful scripts, fake identities, or abusive messages are now intercepted more often.

Some developers and researchers testing AI limits may notice increased sensitivity, with the system occasionally rejecting borderline prompts that could be valid in controlled research contexts. Microsoft acknowledges there’s a fine line between over-blocking and under-protecting and says it will refine that balance based on feedback.

Businesses deploying Copilot across their organizations benefit from the stronger safeguards, which reduce the risk of employees misusing the tool and exposing the company to liability. Employers can be more confident that Copilot is safe to roll out across teams without constant oversight.

At the same time, the changes remind users that Copilot, like any AI tool, isn’t completely foolproof. Personal responsibility still applies, and the AI should remain a helper rather than a loophole for unethical behavior.

Microsoft’s actions reflect a broader trend in the technology industry toward more accountability in AI development. As artificial intelligence becomes more capable, the risk of misuse grows. Governments, advocacy groups, and the public are asking harder questions about who is responsible when things go wrong. Microsoft has chosen to address these concerns proactively with Copilot rather than wait for regulation to force its hand.

This move also serves as a signal to competitors and partners that safety is becoming a competitive differentiator in AI. By showing that it can balance utility with responsibility, Microsoft hopes to build trust in its tools over the long term. It also demonstrates that keeping AI aligned with positive purposes requires constant vigilance, not just one-time safeguards.

There are still challenges ahead. Critics argue that technical filters can be bypassed and that bad actors will find ways to adapt. Others worry about overreach — that too much filtering could limit legitimate research or creativity. Microsoft acknowledges that no system is perfect and sees this crackdown as a work in progress rather than a final solution.

Microsoft’s crackdown on malicious Copilot use is a practical response to real risks. By improving its technology, tightening its rules, and promoting responsible AI practices, the company makes misuse harder while keeping the tool helpful for regular users. These changes highlight the need to guide artificial intelligence carefully so that it stays beneficial. Microsoft’s new measures aim to keep Copilot a trusted, safe assistant without compromising innovation or everyday usability.

Advertisement

Explore what large language models (LLMs) are, how they learn, and why transformers and attention mechanisms make them powerful tools for language understanding and generation

Learn how ZenML helps streamline EV efficiency prediction—from raw sensor data to production-ready models. Build clean, scalable pipelines that adapt to real-world driving conditions

How Gradio reached one million users by focusing on simplicity, openness, and real-world usability. Learn what made Gradio stand out in the machine learning community

DataRobot acquires AI startup Agnostiq to boost open-source and quantum computing capabilities.

Advertisement

How machine learning is transforming sales forecasting by reducing errors, adapting to real-time data, and helping teams make faster, more accurate decisions across industries

How AI in real estate is redefining how properties are bought, sold, and managed. Discover 10 innovative companies leading the shift through smart tools and data-driven decisions

Bias in generative AI starts with the data and carries through to training and outputs. Here's how teams audit, adjust, and monitor systems to make them more fair and accurate

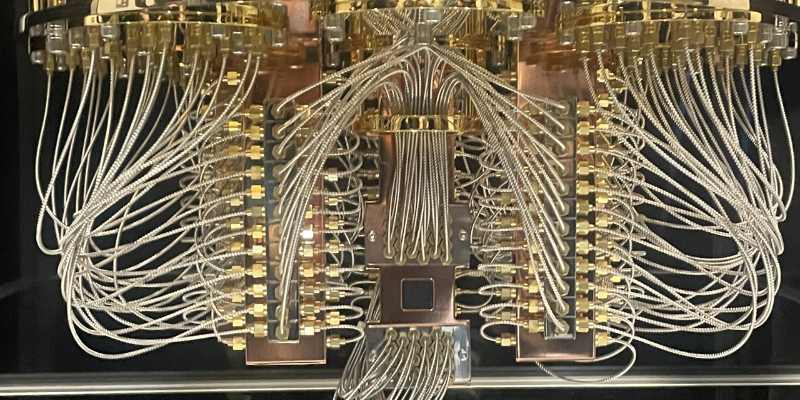

Explore the current state of quantum computing, its challenges, progress, and future impact on science, business, and technology

Advertisement

What data lakes are and how they work with this step-by-step guide. Understand why data lakes are used for centralized data storage, analytics, and machine learning

How the Bamba: Inference-Efficient Hybrid Mamba2 Model improves AI performance by reducing resource demands while maintaining high accuracy and speed using the Mamba2 framework

What happens when two tech giants team up? At Nvidia GTC 2025, IBM and Nvidia announced a partnership to make enterprise AI adoption faster, more scalable, and less chaotic. Here’s how

AI in Cars is transforming how we drive, from self-parking systems to predictive maintenance. Explore how automotive AI enhances safety, efficiency, and comfort while shaping the next generation of intelligent vehicles