
Access to high-performance machine learning has often felt like a luxury — available mostly to large companies or well-funded research teams. The need for specialized hardware and complex setups has left many developers watching from the sidelines. But that's starting to shift. Intel and Hugging Face have announced a partnership that brings advanced machine learning acceleration within reach of more people.

By combining Intel’s hardware with Hugging Face’s accessible tools, they’re offering a path where performance doesn’t depend on deep pockets or proprietary systems. The move widens participation and levels the playing field for AI development.

For years, machine learning has leaned on specialized hardware, especially GPUs, to train and deploy models efficiently. These tools, while powerful, often come with steep costs and vendor lock-in. Hugging Face, known for its open-access AI models and training tools, is working with Intel to change that by integrating Intel’s hardware and software, such as CPUs, Gaudi accelerators, and oneAPI, into its ecosystem.

This setup allows developers to run models using Intel’s hardware — either locally or in the cloud — without having to rewrite code for each platform. Hugging Face’s interface handles optimization in the background. Developers can get performance improvements using machines they already have or through more affordable cloud instances.

Intel’s support for a wide range of hardware lets users work within familiar environments while still gaining performance boosts. Combined with Hugging Face’s tools and community, this collaboration opens machine learning to more people beyond large enterprises and research labs.

Intel’s hardware isn’t always the first name in AI, but it remains foundational in computing. Now, the company is focusing more on AI acceleration — not by mimicking GPU makers, but by offering flexibility and broader compatibility.

Gaudi accelerators and the open oneAPI programming model are central to this strategy. With oneAPI, developers can write code that runs across different hardware types (CPUs, GPUs, and accelerators) without being tied to a single architecture or vendor. This flexibility pairs well with Hugging Face’s goal of making AI easier to access and use.

Intel has also developed optimization tools such as the OpenVINO toolkit, which can speed up inference and lower energy use. Combined with Hugging Face’s Transformers library and Inference Endpoints, these tools make deployment smoother and faster without requiring deep backend expertise.

Energy use is another angle here. Running AI models at scale is costly, and not just in dollars. By optimizing workloads across hardware, Intel and Hugging Face are helping to reduce energy waste, an often-overlooked part of the conversation around AI accessibility.

Hugging Face has been central to making AI easier to use. It started with natural language processing and expanded to include vision, audio, and multimodal models. With its open approach, user-friendly APIs, and strong documentation, it has attracted a wide user base, from solo developers to large teams.

Now, with Intel integration, Hugging Face bridges another gap: software and hardware. Developers using Inference Endpoints will soon be able to deploy models backed by Intel accelerators without touching infrastructure settings. They can pick a model, click deploy, and let the platform handle the rest.

One key tool in this mix is the Optimum library, which connects models to hardware-specific optimizations. The collaboration has deepened support for Intel chips through Optimum, making performance-tuning steps such as quantization and pruning possible with minimal effort. These steps used to be the domain of experienced engineers; now they are accessible to anyone working in AI.
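To make the two tuning steps mentioned above less abstract, here is a minimal plain-Python sketch of what quantization and pruning do to a model’s weights. It is not Optimum’s, OpenVINO’s, or Intel’s API: the function names `quantize_int8`, `dequantize_int8`, and `magnitude_prune` are hypothetical, and the sketch assumes the simplest variants, symmetric per-tensor int8 quantization and unstructured magnitude pruning, applied to a flat list of weights.

```python
# Hypothetical helpers for illustration only; not part of Optimum, OpenVINO, or any Intel library.
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization (the scheme assumed for this sketch)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Pick a scale so the largest-magnitude weight maps to +/-127; use 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest integer step and clamp to [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map the integer values back to approximate floating-point weights."""
    return [v * scale for v in q]


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of smallest-magnitude weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)
    # Sort indices by absolute value and mark the k smallest for removal.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.01, 0.9, -0.03]
    q, scale = quantize_int8(w)
    approx = dequantize_int8(q, scale)
    print("int8 values:", q, "scale:", scale)
    # Rounding error per weight is at most half a quantization step (up to float noise).
    print("max error:", max(abs(a - b) for a, b in zip(w, approx)))
    print("pruned:", magnitude_prune(w, sparsity=0.4))
```

Production toolchains typically go further, for example calibrating scales per channel or per layer and using structured pruning patterns the hardware can exploit; this sketch only shows the underlying arithmetic that such tools automate.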

Intel’s AI Suite also integrates with Hugging Face’s tools, making optimized performance easier to reach without needing new skills. This means more people can work with larger models or deploy applications on everyday machines.

It’s not just about saving time. These improvements help widen participation in AI. Someone with a standard laptop or a basic cloud server can now get much closer to performance levels that were once available only with high-end, expensive setups.

This partnership shows a shift in how machine learning is built and shared. For a long time, access to good performance meant needing top-tier hardware or cloud budgets. That’s now changing.

With Intel’s broader, more cost-effective hardware stack and Hugging Face’s user-focused platform, developers from different backgrounds and resource levels can participate in AI creation more fully. Small teams, students, and organizations with limited funding can build and deploy models that meet real-world needs.

Cloud providers might also start shifting. While many offer GPU-based services at premium rates, Intel’s AI-friendly tools could lead to more affordable yet still efficient options. That could open the door to new pricing models and more flexibility in choosing infrastructure.

The partnership also sets an example. It shows that AI performance gains don’t have to come with a steep learning curve or locked-in services. Others in the space — whether hardware makers or software platforms — may look to follow suit. Open tools that support performance without limiting freedom or increasing complexity could become the standard.

The partnership between Intel and Hugging Face marks a shift toward making machine learning more practical and accessible. By lowering the technical and financial entry points, they’re helping move AI development beyond a select group of well-funded teams. Intel’s expanding AI hardware options, paired with Hugging Face’s familiar tools, offer a smoother path for developers to build, train, and deploy models without overhauling their workflows. This kind of integration supports broader experimentation and innovation. As more developers use these tools, the field begins to reflect a wider range of perspectives and needs. That’s not just progress in performance — it’s progress in participation and inclusion.

Advertisement

Intel and Hugging Face are teaming up to make machine learning hardware acceleration more accessible. Their partnership brings performance, flexibility, and ease of use to developers at every level

How the OpenAI jobs platform is changing the hiring process through AI integration. Learn what this means for job seekers and how it may reshape the future of work

Learn how to use ChatGPT for customer service to improve efficiency, handle FAQs, and deliver 24/7 support at scale

Microsoft has introduced stronger safeguards and policies to tackle malicious Copilot AI use, ensuring the tool remains safe, reliable, and aligned with responsible AI practices

Advertisement

Looking for simple ways to export and share your ChatGPT history? These 4 tools help you save, manage, and share your conversations without hassle

Find the top eight DeepSeek AI prompts that can accelerate your branding, content creation, and digital marketing results.

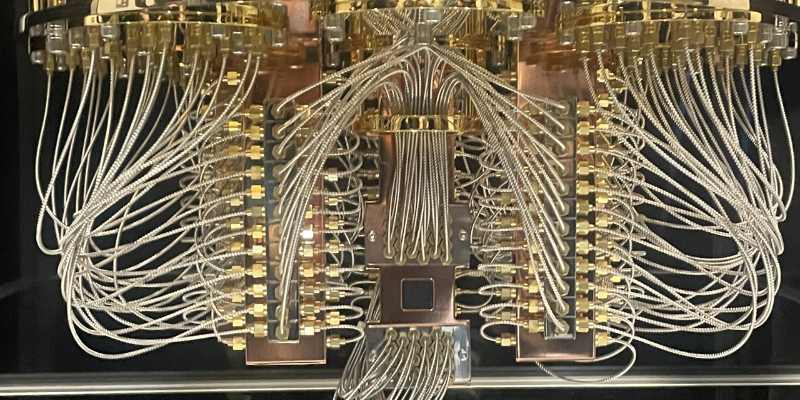

Explore the current state of quantum computing, its challenges, progress, and future impact on science, business, and technology

Learn 5 simple steps to protect your data, build trust, and ensure safe, fair AI use in today's digital world.

Advertisement

Learn the top 8 Claude AI prompts designed to help business coaches and consultants boost productivity and client results.

Curious about ChatGPT jailbreaks? Learn how prompt injection works, why users attempt these hacks, and the risks involved in bypassing AI restrictions

Is self-driving tech still a future dream? Not anymore. Nvidia’s full-stack autonomous driving platform is now officially in production—and it’s already rolling into real vehicles

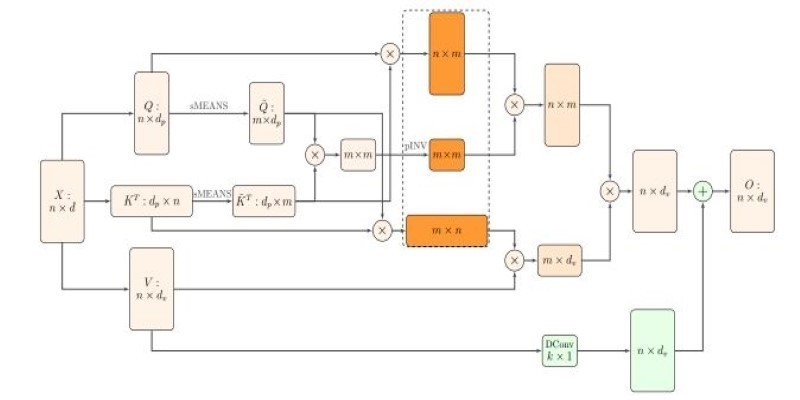

How Nyströmformer uses the Nystrmmethod to deliver efficient self-attention approximation in linear time and memory, making transformer models more scalable for long sequences