
AI development often moves fast, but some releases feel more grounded. Llama Guard 4, now on Hugging Face Hub, stands out for that reason. It's not flashy or overloaded with hype—it’s built for something practical: helping developers manage the real-world issues that come with language models. Created by Meta, the Llama Guard series is focused on moderation and alignment.

With version 4, it's clear the goal is usable, flexible AI safety for anyone building with large language models. This release isn't just an upgrade—it signals that safety tools are becoming more integrated and accessible.

Llama Guard 4 is a moderation and safety model that screens prompts and responses for content risks. It identifies unsafe content such as hate speech, harassment, self-harm, or other flagged categories. It works in tandem with large language models, especially the Llama 4 series it was released alongside, acting like a filter that catches content before it reaches users or causes harm.

Unlike simple filters, Llama Guard 4 is instruction-tuned and understands the structure of interactive AI conversations. That means it can screen user prompts and model replies, making it useful across many applications—especially in chatbots or virtual assistants where interactions flow both ways.
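To make the two-way screening concrete, here is a minimal sketch of a chat turn that is checked twice: once on the user prompt and once on the model reply. The names `guarded_reply`, `toy_generate`, and `toy_is_safe` are hypothetical stand-ins, not part of any Llama Guard or Hugging Face API; in a real system the `is_safe` callable would wrap a call to the safety model.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical classifier interface: receives the conversation so far and
# returns True when the last message is considered safe.
Classifier = Callable[[List[Message]], bool]

REFUSAL = "Sorry, I can't help with that."


def guarded_reply(
    user_prompt: str,
    generate: Callable[[str], str],
    is_safe: Classifier,
) -> str:
    """Screen the prompt, generate a reply, then screen the reply."""
    conversation: List[Message] = [{"role": "user", "content": user_prompt}]

    # Prompt classification: stop before the main model is ever called.
    if not is_safe(conversation):
        return REFUSAL

    reply = generate(user_prompt)
    conversation.append({"role": "assistant", "content": reply})

    # Response classification: check the reply in the context of the prompt.
    if not is_safe(conversation):
        return REFUSAL
    return reply


# Toy stand-ins so the sketch runs without downloading any model.
def toy_generate(prompt: str) -> str:
    return f"echo: {prompt}"


def toy_is_safe(conversation: List[Message]) -> bool:
    return "forbidden" not in conversation[-1]["content"].lower()


print(guarded_reply("hello", toy_generate, toy_is_safe))
```

Passing the whole conversation to the classifier, rather than a single string, is a deliberate choice here: it lets the second check judge the reply together with the prompt that produced it.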

One of the standout features is that it works with a structured set of safety categories. The model card lists them as short codes (S1 through S14, based on the MLCommons hazards taxonomy), and the list is supplied to the model as part of the prompt. Developers can use the default set or tailor it to suit specific risk concerns. So, whether you need to flag general risks or create a custom moderation rule set, Llama Guard 4 gives you a base from which to work.

Being hosted on Hugging Face makes it easy to integrate and test. Developers can plug it into their existing pipelines without heavy lifting, making it far more approachable than building safety tools from scratch.

Llama Guard 4 sorts content into defined risk groups and reports which ones a message violates. Its output is short: a safe or unsafe verdict, followed by the codes of any violated categories. This makes it more transparent than older moderation systems that simply flag content without explanation. You don't just get a yes or no; you see which policy triggered the call, which helps with debugging and with building user trust.
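As an illustration, the sketch below turns the model's raw text verdict into a small structured object. It assumes the output format described in the model card: a first line reading `safe` or `unsafe`, followed for unsafe content by category codes such as `S9`, possibly comma-separated. The `Verdict` class and `parse_verdict` function are hypothetical helpers, not part of any library.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Verdict:
    safe: bool
    categories: List[str] = field(default_factory=list)


def parse_verdict(raw: str) -> Verdict:
    """Parse a 'safe' / 'unsafe' verdict plus optional category codes."""
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty classifier output")

    label = lines[0].lower()
    if label == "safe":
        return Verdict(safe=True)
    if label != "unsafe":
        # Fail loudly rather than guessing what the model meant.
        raise ValueError(f"unexpected label: {lines[0]!r}")

    # Codes may arrive one per line or comma-separated on a single line.
    codes: List[str] = []
    for line in lines[1:]:
        codes.extend(code.strip() for code in line.split(",") if code.strip())
    return Verdict(safe=False, categories=codes)


print(parse_verdict("unsafe\nS1,S10"))
```

Raising on an unrecognized label is the conservative option: a moderation layer that silently treats malformed output as safe would defeat its own purpose.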

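The category list is flexible, meaning you can adjust what you want to monitor. Want to be stricter about political topics or misinformation? You can drop categories that don't apply or describe additional ones in the prompt, though how well the model handles categories it was not trained on should be tested rather than assumed. This adaptability allows teams to build safety systems reflecting specific use cases or policies.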
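One way to express that kind of team-specific strictness is an application-side policy that maps category codes to actions. The sketch below is an assumption about how a team might layer its own rules on top of the model's verdict; it is not the model's API. The action names, the `POLICY` table, and the `decide` function are all hypothetical, and the category meanings in the comments follow the model card as we understand it.

```python
from typing import Dict, Iterable

# Actions ordered from least to most severe (hypothetical names).
SEVERITY = ["allow", "review", "block", "escalate"]

# Any flagged category not listed below is blocked by default.
DEFAULT_ACTION = "block"

# Per-category overrides for this particular product.
POLICY: Dict[str, str] = {
    "S13": "review",    # Elections: send to a human reviewer instead of blocking
    "S11": "escalate",  # Suicide & Self-Harm: route to a support workflow
}


def decide(
    categories: Iterable[str],
    policy: Dict[str, str] = POLICY,
    default: str = DEFAULT_ACTION,
) -> str:
    """Return the most severe action triggered by the flagged categories."""
    actions = [policy.get(code, default) for code in categories]
    if not actions:
        return "allow"
    return max(actions, key=SEVERITY.index)


print(decide(["S13"]))         # review
print(decide(["S13", "S10"]))  # block
```

Keeping the policy in plain data rather than in code means it can be changed or audited without touching the screening logic.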

Hugging Face's ecosystem makes testing and deploying the model straightforward. With hosted APIs and Spaces, developers can experiment without deep infrastructure work. This shortens development cycles and allows smaller teams to add strong safety tools quickly.

Having access to Llama Guard 4 means less time worrying about edge cases and more time refining the core product. For teams shipping AI features, this can be the difference between a delayed launch and one that's responsibly ready.

Llama Guard 4 improves on past versions by being more context-aware. Earlier versions could detect basic violations, but version 4 is tuned for the conversational flow typical in AI interactions. That lets it catch subtler risks—things that might seem harmless on the surface but become questionable in context.

It's also lighter than you'd expect for a moderation model. At 12 billion parameters, it is easier to run on modest hardware or within a cloud budget than a frontier-scale model would be. You don't need enterprise-scale computing to put it to work. This is especially helpful for indie developers or startups that need safety tools but don't have a huge infrastructure.

Meta's documentation and benchmarks help users understand its performance. The model aims to balance sensitivity and accuracy, reducing false positives without letting harmful content slide through. That balance is hard to strike, but it is essential for keeping things safe without frustrating users.

Importantly, Meta released the model under a license that permits commercial use, which signals that it is meant for real-world products. Llama Guard 4 isn't just for researchers; it's for people building customer-facing tools.

AI alignment often feels abstract, but Llama Guard 4 brings it closer to everyday practice. It gives developers a clear tool for screening content and defining what’s acceptable in their AI products. You don’t have to rewrite your model or guess what could go wrong. Instead, you use a system designed to handle the common challenges and offer flexibility when new ones appear.

Its availability on Hugging Face underscores a broader move toward openness and community use. Developers anywhere can test, modify, and deploy it without much red tape. This makes good safety tools easier to apply across various projects—from educational apps to customer service bots.

Smaller teams, in particular, benefit from the accessibility. Instead of choosing between speed and responsibility, they can move quickly without skipping safety. And because the model reports which categories flagged content falls under, it helps build better systems over time, not just safer ones.

It's not a silver bullet, but Llama Guard 4 is useful. It shows how alignment tools can evolve from reactive to proactive, from rigid filters to adaptable frameworks. This kind of progress gives developers more control and transparency and a realistic shot at making safe AI part of everyday tools.

Llama Guard 4's release on Hugging Face isn't just about another model; it's a sign that AI safety is starting to meet developers where they are. Its mix of structure, adaptability, and usability allows teams to add moderation without high costs or guesswork. Whether you are building something simple or managing large-scale interactions, this model offers a clear way to screen and shape AI output. It won't solve everything, but it's a strong step toward making responsible AI more practical, and a bit easier to build into the things people use every day.

Advertisement

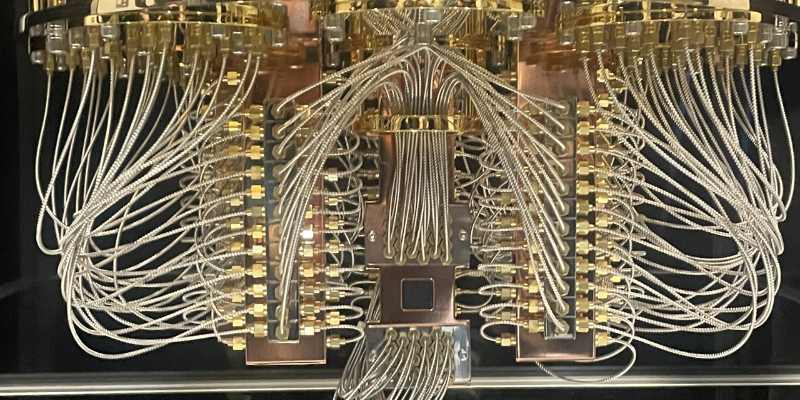

Explore the current state of quantum computing, its challenges, progress, and future impact on science, business, and technology

Learn how ZenML helps streamline EV efficiency prediction—from raw sensor data to production-ready models. Build clean, scalable pipelines that adapt to real-world driving conditions

How a humanoid robot learns to walk like a human by combining machine learning with advanced design, achieving natural balance and human-like mobility on real-world terrain

Yamaha launches its autonomous farming division to bring smarter, more efficient solutions to agriculture. Learn how Yamaha robotics is shaping the future of farming

Advertisement

What happens when ML teams stop juggling tools? Fetch moved to Hugging Face on AWS and cut development time by 30%, boosting consistency and collaboration across projects

How Amazon S3 works, its storage classes, features, and benefits. Discover why this cloud storage solution is trusted for secure, scalable data management

Improve your skills (both technical and non-technical) and build cool projects to set yourself apart in this crowded job market

What makes StarCoder2 and The Stack v2 different from other models? They're built with transparency, balanced performance, and practical use in mind—without hiding how they work

Advertisement

Is self-driving tech still a future dream? Not anymore. Nvidia’s full-stack autonomous driving platform is now officially in production—and it’s already rolling into real vehicles

How Llama Guard 4 on Hugging Face Hub is reshaping AI moderation by offering a structured, transparent, and developer-friendly model for screening prompts and outputs

PaLM 2 is reshaping Bard AI with better reasoning, faster response times, multilingual support, and safer content. See how this powerful model enhances Google's AI tool

Intel and Hugging Face are teaming up to make machine learning hardware acceleration more accessible. Their partnership brings performance, flexibility, and ease of use to developers at every level