When most people think of Hugging Face, they think of Transformers. And that's fair — the Transformers library has reshaped how developers approach natural language processing. But Hugging Face is more than just one tool. It's a wide ecosystem of resources, many of which don't get half the credit they deserve. Some of them are quietly doing a lot of heavy lifting, helping developers build faster, work smarter, and skip repetitive tasks.

This list isn't about the flashiest libraries or the ones with the most GitHub stars. It covers seven lesser-known tools that get things done and are worth paying attention to.

1. Evaluate

You've fine-tuned your model; it runs smoothly and spits out predictions. Now what? Evaluation is often where momentum drops. Hugging Face's Evaluate library takes the friction out of that process. You can quickly score your model with popular metrics like BLEU, ROUGE, accuracy, or precision, without rewriting boilerplate code or digging up outdated GitHub scripts.

What makes it even more convenient is how it works right out of the box with datasets and models already hosted on Hugging Face. You can pass in predictions and references directly and get back clean metric scores that are ready to report or compare. It’s not fancy, but it is reliable—and reliability is everything when you’re trying to finish up an experiment before the deadline.
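The predictions-and-references workflow described above can be sketched as follows. This is a minimal illustration, not the library's own example: `load_hub_accuracy` assumes the `evaluate` package (and `scikit-learn`, which the accuracy metric is assumed to need) is installed and the Hub is reachable, while `LocalAccuracy` is a hypothetical offline stand-in that only mirrors the `compute(predictions=..., references=...)` call shape.

```python
def load_hub_accuracy():
    """Load the accuracy metric through Hugging Face's `evaluate` library.

    Assumes `pip install evaluate scikit-learn` and network access to the Hub.
    """
    import evaluate  # imported lazily so the rest of the sketch runs offline

    return evaluate.load("accuracy")


class LocalAccuracy:
    """Hypothetical offline stand-in that mirrors the metric's compute() call."""

    def compute(self, predictions, references):
        if len(predictions) != len(references):
            raise ValueError("predictions and references must have the same length")
        if not references:
            raise ValueError("need at least one example")
        correct = sum(p == r for p, r in zip(predictions, references))
        return {"accuracy": correct / len(references)}


def score(metric, predictions, references):
    """Pass predictions and references straight to the metric."""
    return metric.compute(predictions=predictions, references=references)


predictions = [0, 1, 1, 0]
references = [0, 1, 0, 0]

# Swap LocalAccuracy() for load_hub_accuracy() to use the metric from the Hub.
result = score(LocalAccuracy(), predictions, references)
print(result)  # {'accuracy': 0.75}
```

Because `score` only relies on the `compute` call, the same function works with either the stand-in or the real metric object.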

2. Spaces

Most people know about Hugging Face Spaces but don't always realize how useful they can be beyond just showing off models. Spaces let you build interactive demos using Streamlit, Gradio, or plain HTML, all hosted by Hugging Face, with a free tier for basic hardware.

Why is this such a big deal? You don’t need to spin up a server or deal with Docker just to share something simple. If you're building a proof-of-concept or need user feedback on a feature, Spaces is a shortcut that skips infrastructure completely. It's especially helpful for those who don't want to juggle frontend code and backend deployments at the same time.

Even better, you can duplicate (fork) someone else's Space and tweak it to fit your needs, which makes iteration quick and light.
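To give a sense of how little code a demo needs, here is a minimal sketch of what a Gradio-based Space's `app.py` might contain. It assumes the `gradio` package is available in the Space; `greet` is a hypothetical placeholder for whatever model call you want to expose.

```python
def greet(name: str) -> str:
    """Toy function the demo exposes; stands in for a real model call."""
    name = name.strip() or "world"
    return f"Hello, {name}!"


def build_demo():
    """Wrap greet() in a Gradio text-in, text-out interface."""
    import gradio as gr  # lazy import: only needed when the demo is built

    return gr.Interface(fn=greet, inputs="text", outputs="text")


# In a Space's app.py you would finish with:
#     build_demo().launch()
# Here we only exercise the underlying function.
print(greet("Ada"))  # Hello, Ada!
```

Keeping the logic in a plain function like `greet` means you can test it locally before wiring it into any interface.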

3. Dataset Viewer

When working with large datasets, downloading and processing files just to view their contents can feel like overkill. That's where the Dataset Viewer comes in. Directly from the Hugging Face Hub, you can click into a dataset and browse actual samples, complete with formatting, labels, and previews of the structure.

This might sound basic, but it's surprisingly helpful. You don't need to load anything locally or write test scripts just to figure out what the data looks like. For messy or community-uploaded datasets, this viewer can be the difference between moving forward and wasting time on something that won't work.

It’s especially handy if you're dealing with multilingual or multi-format datasets where assumptions about the structure could cost you time.
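If you want the same preview from a script, the viewer is also reachable over HTTP. The sketch below is not from the article; it assumes the public `first-rows` endpoint at `https://datasets-server.huggingface.co` with `dataset`, `config`, and `split` query parameters, and it assumes the response lists samples under a `rows` key with each sample in a `row` field. The dataset name is purely illustrative.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_ROOT = "https://datasets-server.huggingface.co"  # assumed viewer API host


def first_rows_url(dataset: str, config: str, split: str) -> str:
    """Build the URL that asks for the first rows of one split."""
    query = urlencode({"dataset": dataset, "config": config, "split": split})
    return f"{API_ROOT}/first-rows?{query}"


def fetch_first_rows(dataset: str, config: str, split: str, timeout: float = 10.0) -> dict:
    """Perform the HTTP call (needs network access; not run in this sketch)."""
    with urlopen(first_rows_url(dataset, config, split), timeout=timeout) as resp:
        return json.load(resp)


def sample_rows(payload: dict, limit: int = 3) -> list:
    """Pull plain row dicts out of a response payload (assumed shape)."""
    return [item["row"] for item in payload.get("rows", [])[:limit]]


url = first_rows_url("rotten_tomatoes", "default", "train")
print(url)
```

Calling `sample_rows(fetch_first_rows(...))` would then give you a handful of records to inspect without downloading the dataset.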

4. Model Cards: Not Just Documentation

Model cards often get overlooked as "just the README." But they’re a lot more than that. If you’re building anything that uses third-party models, skipping the model card is like using an API without checking the documentation.

Many model cards on Hugging Face now include full descriptions of the training data, intended use cases, risks, limitations, and sample code. If you're integrating models into production, that information helps avoid misuse or performance issues later on. It's especially helpful when multiple models seem to do the same thing, but one is trained on domain-specific data while another is general-purpose.

The better model cards even include things like evaluation scores on benchmarks and a changelog, which makes versioning easier to manage.

5. Inference API

Want to test a model but don't feel like installing it locally or setting up a runtime environment? Hugging Face's Inference API lets you do exactly that. Just send a simple HTTP request, and the model does the work remotely.

This is perfect for prototypes, testing, or embedding AI into tools without heavy dependencies. You don't need to fine-tune anything. Just pick the model, send your input, and get back the output—text, image tags, audio classification, or whatever the model supports.

It’s not free for high-volume use, but for occasional requests or proof-of-concept features, it’s quick and light. And you can integrate it directly into frontends or scripts without worrying about GPU access.
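The "simple HTTP request" can be sketched with nothing but the standard library. This is an illustration under assumptions: the endpoint pattern `https://api-inference.huggingface.co/models/<model_id>`, the bearer-token header, and the `inputs` JSON field reflect the classic hosted API and may differ from the current service, so check the official docs. The model ID and the `hf_xxx` token are placeholders.

```python
import json
import os
from urllib.request import Request, urlopen

# Assumed endpoint pattern; verify against the current documentation.
API_ROOT = "https://api-inference.huggingface.co/models"


def build_request(model_id: str, inputs: str, token: str) -> Request:
    """Prepare a POST request carrying the input text as JSON."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return Request(
        f"{API_ROOT}/{model_id}",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(model_id: str, inputs: str, token: str, timeout: float = 30.0):
    """Send the request and decode the JSON reply (needs network and a valid token)."""
    with urlopen(build_request(model_id, inputs, token), timeout=timeout) as resp:
        return json.load(resp)


request = build_request(
    "distilbert-base-uncased-finetuned-sst-2-english",  # illustrative model ID
    "This tool saved me an afternoon.",
    token=os.environ.get("HF_TOKEN", "hf_xxx"),  # placeholder token
)
print(request.get_method(), request.full_url)
```

Separating `build_request` from `query` lets you inspect exactly what will be sent before spending any quota on a real call.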

6. AutoTrain

AutoTrain doesn't get enough attention for what it offers. This tool lets you fine-tune models without writing a single line of training code. You upload your dataset, configure a few parameters in the web interface, and AutoTrain handles everything from tokenization to evaluation.

It’s not just a time-saver. It lowers the entry point for people who are strong in data prep but less comfortable with PyTorch or TensorFlow. It’s also great for quick experiments when you want to compare different architectures without investing hours in setup.

For people who just want results and aren’t building custom layers or tweaks, AutoTrain is a shortcut that actually works.

7. Hugging Face CLI

While most people use the web interface to browse and manage models, the Hugging Face CLI can speed things up significantly, especially if you work inside a terminal most of the day.

You can use the CLI to log in, upload or download models and datasets, create repositories, and push files to your Spaces. It helps reduce context switching between the browser and code, and it's especially helpful for CI/CD pipelines or automation.

This tool doesn’t do anything flashy. It just saves small chunks of time—and over a week of work, those add up.
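For automation, a pipeline step can assemble the CLI call programmatically. The sketch below assumes the `huggingface-cli upload <repo_id> <local_path> [path_in_repo] --repo-type <type>` form shipped with the `huggingface_hub` package (newer releases may expose the same command under a different executable name) and that credentials come from a prior login or an `HF_TOKEN` environment variable. The repo ID and paths are hypothetical.

```python
import shlex
import subprocess


def upload_command(repo_id, local_path, path_in_repo=None, repo_type="model"):
    """Build the argv list for `huggingface-cli upload` (no shell involved)."""
    cmd = ["huggingface-cli", "upload", repo_id, local_path]
    if path_in_repo is not None:
        cmd.append(path_in_repo)
    cmd += ["--repo-type", repo_type]
    return cmd


def run(cmd):
    """Execute the command; raises CalledProcessError on a non-zero exit."""
    subprocess.run(cmd, check=True)


# Hypothetical repo ID and folder; nothing is executed here, only printed.
cmd = upload_command("my-user/my-model", "./checkpoints")
print(shlex.join(cmd))
```

Passing the command as a list rather than a shell string avoids quoting problems when paths contain spaces.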

Not every tool needs to be groundbreaking to be useful. Hugging Face’s strength lies in its ability to take common tasks and make them easier, faster, and more accessible. These underrated tools may not receive the spotlight, but they often carry the bulk of the workload in real projects. If you haven't used some of them yet, it might be worth taking a closer look—you’ll probably end up wondering why you didn’t use them sooner.

Advertisement

Intel and Hugging Face are teaming up to make machine learning hardware acceleration more accessible. Their partnership brings performance, flexibility, and ease of use to developers at every level

AI content detectors don’t work reliably and often mislabel human writing. Learn why these tools are flawed, how false positives happen, and what smarter alternatives look like

Ahead of the curve in 2025: Explore the top data management tools helping teams handle governance, quality, integration, and collaboration with less complexity

How the Bamba: Inference-Efficient Hybrid Mamba2 Model improves AI performance by reducing resource demands while maintaining high accuracy and speed using the Mamba2 framework

Advertisement

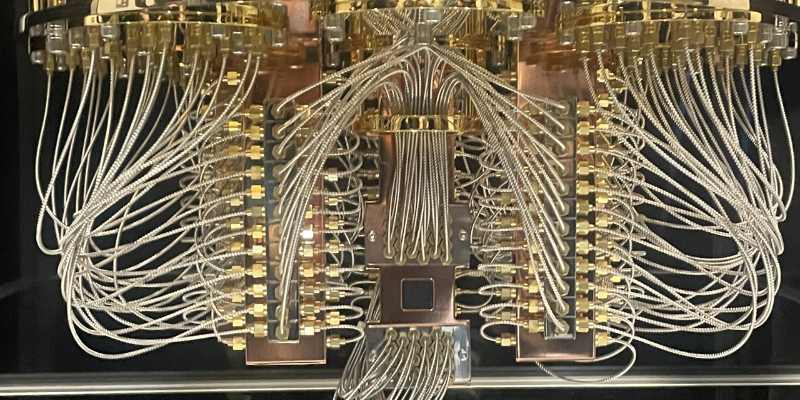

Explore the current state of quantum computing, its challenges, progress, and future impact on science, business, and technology

Discover how Nvidia's latest AI platform enhances cloud GPU performance with energy-efficient computing.

How Amazon AppFlow simplifies data integration between SaaS apps and AWS services. Learn about its benefits, ease of use, scalability, and security features

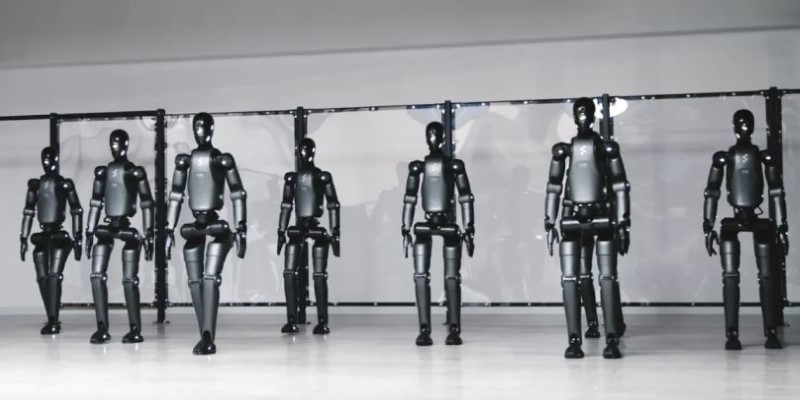

How a humanoid robot learns to walk like a human by combining machine learning with advanced design, achieving natural balance and human-like mobility on real-world terrain

Advertisement

DataRobot acquires AI startup Agnostiq to boost open-source and quantum computing capabilities.

Bias in generative AI starts with the data and carries through to training and outputs. Here's how teams audit, adjust, and monitor systems to make them more fair and accurate

How Nvidia produces China-specific AI chips to stay competitive in the Chinese market. Learn about the impact of AI chip production on global trade and technological advancements

Is self-driving tech still a future dream? Not anymore. Nvidia’s full-stack autonomous driving platform is now officially in production—and it’s already rolling into real vehicles