Advertisement

When we use generative AI—whether it's crafting text, generating images, or answering questions—we expect it to be accurate, helpful, and fair. But it doesn't always turn out that way. Sometimes, these systems reflect human biases that were never supposed to be there. That can shape answers, change tones, or shift recommendations in subtle ways. And the reality is these biases don't just slip through—they're often baked into the very data that trains the models.

Understanding where these biases come from is the first step. But fixing them? That’s where things get more serious. You can’t just flip a switch and expect an AI system to become neutral. It takes a structured, ongoing process—one that combines clear objectives, transparent methods, and regular evaluation.

Bias in AI isn’t always loud. It doesn’t always shout from the results. Sometimes, it’s a whisper in the phrasing. Sometimes, it’s in the absence of certain perspectives. And sometimes, it's in how much attention one kind of data gets over another.

Imagine asking a generative model to describe a doctor. If the model always defaults to a male doctor and a female nurse, that’s bias. Or if a system’s image generator produces lighter-skinned individuals when prompted with the word “professional,” that’s bias, too.

These patterns don’t happen randomly. They come from the data—books, articles, websites, and user interactions that the AI has seen. The problem is that most of that content reflects real-world inequalities, stereotypes, and unbalanced perspectives. When you feed that into a model without scrutiny, you're basically handing it a map of how those biases are distributed—and telling it to follow the route.

Generative AI relies on large datasets scraped from the internet, research archives, books, and more. These sources aren’t curated for fairness. They’re gathered at scale. And because of that, some voices get amplified while others are barely heard.

For instance, if an English-language dataset includes 85% of content from U.S.-based sources, you'll get a model that leans heavily toward U.S. cultural norms. Or if tech-heavy forums dominate the training data, the AI may echo certain political or gender biases more commonly found there.

Then there’s the labeling problem. When models are trained using labeled data—for example, marking text as “toxic” or “non-toxic”—the labels themselves are often subjective. What one group calls “offensive,” another might see as a fair opinion. These decisions feed directly into the way AI systems learn to generate or filter responses.

Fixing bias doesn’t mean deleting it at the surface level. It means changing how systems are built, trained, and tested. Here's a breakdown of how teams go about doing that.

Before a model is trained, the data it will learn from should be reviewed. This is not just about checking size or format. It’s about checking for gaps and skews.

Developers use statistical tools to spot imbalances, like how often certain words or demographics appear in association with specific roles or traits. The aim is to catch these patterns before the AI absorbs them.

Once the bias is identified, the next step is to fix the imbalance. This might mean adding more diverse content—such as text from underrepresented regions or authors—or removing certain types of skewed data altogether.

Some teams create synthetic examples to correct gaps, like adding more stories written by or about minority groups. But it has to be done carefully. Injecting synthetic data that doesn’t reflect real human experiences won’t help. If anything, it might make the system more detached.

Even with a cleaner dataset, how the model is trained still matters. This is where bias mitigation techniques like reweighting, adversarial training, and regularization come in.

These methods act like guardrails. They don’t eliminate bias entirely, but they reduce the chances that it becomes the dominant tone in the system’s output.

After training, the model needs to be tested—not just for performance, but for fairness. This is often where overlooked biases show up.

Evaluation often includes both automated tools and human reviewers. Automated checks look for red flags in word associations, frequency counts, and sentiment scores. Human reviewers assess nuance: how something feels, not just what it says.

Even with all these precautions, bias isn’t something you solve once. It’s something you monitor. Generative models continue to interact with users and the world. That means they’re constantly exposed to new information, some of which may reintroduce old biases or bring new ones.

Many organizations now run post-deployment monitoring, where they track how the AI responds to real-world prompts. Feedback tools allow users to flag responses that seem skewed. Scheduled reviews help teams decide when a model needs retraining or fine-tuning.

Some systems even use reinforcement learning from human feedback (RLHF), which lets models learn from corrections. But that process has its own challenges. If the feedback isn’t balanced, the AI might start to reflect the preferences of the loudest users instead of the most accurate or fair.

Bias in generative AI isn’t just a technical issue. It’s tied to how we communicate, what we value, and who gets heard. These systems don’t invent biases—they reflect the world we’ve built. But if we want AI that treats people fairly, the responsibility lies with those building and maintaining these tools.

The process of bias mitigation requires clear goals, steady review, and a willingness to adapt. It’s not a checkbox, and it’s not a one-time fix. But it’s how we keep AI grounded in accuracy and fairness—rather than just efficiency.

Advertisement

Explore the concept of LeRobot Community Datasets and how this ambitious project aims to become the “ImageNet” of robotics. Discover when and how a unified robotics dataset could transform the field

Microsoft has introduced stronger safeguards and policies to tackle malicious Copilot AI use, ensuring the tool remains safe, reliable, and aligned with responsible AI practices

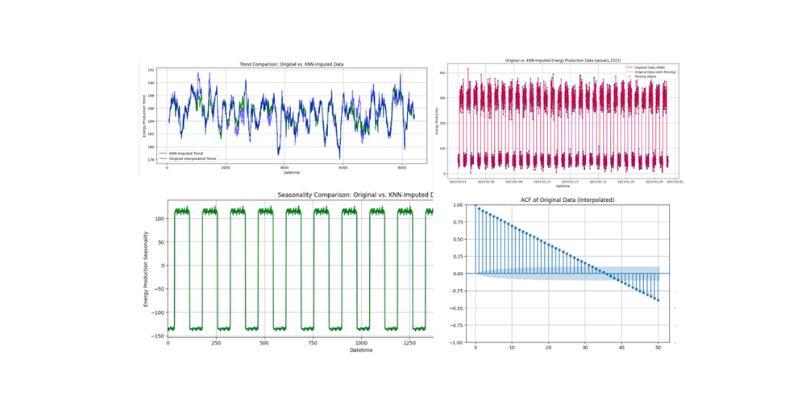

Discover effective machine learning techniques to handle missing data in time-series, improving predictions and model reliability

ChatGPT Search just got a major shopping upgrade—here’s what’s new and how it affects you.

Advertisement

How the AI-enhancing quantum large language model combines artificial intelligence with quantum computing to deliver smarter, faster, and more efficient language understanding. Learn what this breakthrough means for the future of AI

Yamaha launches its autonomous farming division to bring smarter, more efficient solutions to agriculture. Learn how Yamaha robotics is shaping the future of farming

What standardization in machine learning means, how it compares to other feature scaling methods, and why it improves model performance for scale-sensitive algorithms

Bias in generative AI starts with the data and carries through to training and outputs. Here's how teams audit, adjust, and monitor systems to make them more fair and accurate

Advertisement

How 8-bit matrix multiplication helps scale transformer models efficiently using Hugging Face Transformers, Accelerate, and bitsandbytes, while reducing memory and compute needs

Improve your skills (both technical and non-technical) and build cool projects to set yourself apart in this crowded job market

Is self-driving tech still a future dream? Not anymore. Nvidia’s full-stack autonomous driving platform is now officially in production—and it’s already rolling into real vehicles

Explore what large language models (LLMs) are, how they learn, and why transformers and attention mechanisms make them powerful tools for language understanding and generation