The arrival of PaLM 2 marked a shift in how Google approaches AI language modeling. While Bard was already in the race to compete with other chatbots, the integration of PaLM 2 has given it a needed boost. Google’s Bard wasn’t exactly slow, but it lacked the punch users expected compared to others in the space.

PaLM 2 changes that. It's smaller than its predecessor but more efficient, and it was trained on diverse data spanning many languages, math, and reasoning tasks. This article breaks down exactly how PaLM 2 helps Bard become faster, sharper, and more helpful.

PaLM 2 gives Bard a more refined approach to logic and reasoning. One area where early versions of Bard fell short was multi-step thinking. It could answer factual questions, but it struggled to reason through math puzzles, logical chains, or abstract scenarios. PaLM 2’s training focused heavily on advanced reasoning benchmarks, so it is now far better at understanding how one idea connects to another. Bard, powered by this model, doesn’t just guess; it is more likely to build a response step by step, especially for problem-solving or technical explanations.

In practice, this makes Bard more useful for anything involving layers of logic. Whether a user is writing a function, analyzing a hypothetical scenario, or comparing two contrasting philosophies, PaLM 2 allows Bard to hold the thread and keep the logic intact through the conversation.

One of the standout improvements with PaLM 2 is its multilingual training. The model was trained on text spanning more than a hundred languages, which gives Bard a stronger foundation for handling questions and conversations outside English and for producing more natural responses. Earlier versions of Bard often struggled with fluency or accuracy when the user switched from English to another language or even asked for a bilingual response.

With PaLM 2 under the hood, Bard can now translate, summarize, or respond in languages like Japanese, Korean, Arabic, and Hindi with fewer errors. It even understands idiomatic expressions and cultural nuances better than before. That’s a leap in accessibility, allowing Bard to become more inclusive for non-English speakers and a more reliable tool for global users.

PaLM 2’s training includes a substantial amount of programming content. This is a game-changer for users who rely on Bard for coding help. Before the upgrade, Bard’s support for code was basic. It could return common snippets or explain concepts, but it often lacked context or made mistakes with syntax and logic.

Now, Bard can help write, explain, and even debug code more accurately in multiple programming languages, including Python, JavaScript, C++, and Go. The responses are less generic and more aligned with actual developer workflows. For beginners, Bard can walk through concepts clearly. For advanced users, it can help solve specific bugs or explore framework-related problems. The coding abilities now feel more like a proper assistant than a search result.

Bard now performs much better at summarizing large blocks of text, condensing them into short, meaningful takeaways without losing the core message. This is due to PaLM 2's strengthened ability to handle long-context information. Earlier versions often missed the point when tasked with summarizing, especially with longer or emotionally complex content.

With PaLM 2, Bard picks up tone, intent, and detail more precisely. If you paste an article or a research paper, Bard doesn’t just shorten it; it prioritizes what matters. For students, writers, and researchers, this makes it easier to break down heavy content into something manageable. It also helps Bard write more focused emails, abstracts, and reports that don’t ramble.

Speed and accuracy go hand in hand. One of the biggest gains Bard gets from PaLM 2 is efficiency. Because the model is smaller and more optimized, Bard can generate answers faster without sacrificing quality. It gets to the point quicker, while earlier versions often felt like they were taking the scenic route.

The reduced delay isn’t just about speed; it also builds confidence in the replies. With a tighter model and better grounding in facts and logic, PaLM 2 helps Bard offer cleaner, clearer answers. This is noticeable when asking it to compare tools, provide definitions, or explain topics like science or economics. There's less fluff and more direct help.

Google emphasized safety while developing PaLM 2. The model has built-in safeguards for bias reduction, harmful content avoidance, and context checking. Earlier versions of Bard sometimes returned answers that were inappropriate, misleading, or confusing. While no model is perfect, PaLM 2 pushes Bard toward more stable and balanced interactions.

This safety layer means Bard is less likely to return content that violates platform policies or user expectations. Whether it’s responding to sensitive questions or helping with decision-making, Bard now handles these moments with more caution and less chance of error or tone misalignment.

One surprise improvement from PaLM 2 is how it handles creative writing. Bard can now write stories, poems, and narratives that feel more natural and human. Its word choice is less stiff, the tone can shift more smoothly between playful and serious, and it keeps a better flow across paragraphs.

This wasn’t a strong point in early Bard iterations. Creative writing felt robotic or repetitive. But with PaLM 2’s help, Bard can mimic various styles, structure content more intuitively, and adapt based on genre, mood, or audience. It’s not perfect, but it’s a clear improvement, especially for content creators or casual users exploring writing.

PaLM 2 has given Bard more than just an upgrade; it has shaped it into a tool ready for real-world use. It reasons more clearly, supports more languages, writes with care, and works faster. While there’s still room to grow, the gap between Bard and its competitors has shrunk. What stands out now is how Bard feels more reliable, less like a beta product, and more like a sharp assistant you can trust. As PaLM and Bard continue to evolve, the direction is clear: smarter, safer, and more useful for everyone.

Advertisement

Tired of reinventing model workflows from scratch? Hugging Face offers tools beyond Transformers to save time and reduce boilerplate

Rockwell Automation introduced its AI-powered digital twins at Hannover Messe 2025, offering real-time, adaptive virtual models to improve manufacturing efficiency and reliability across industries

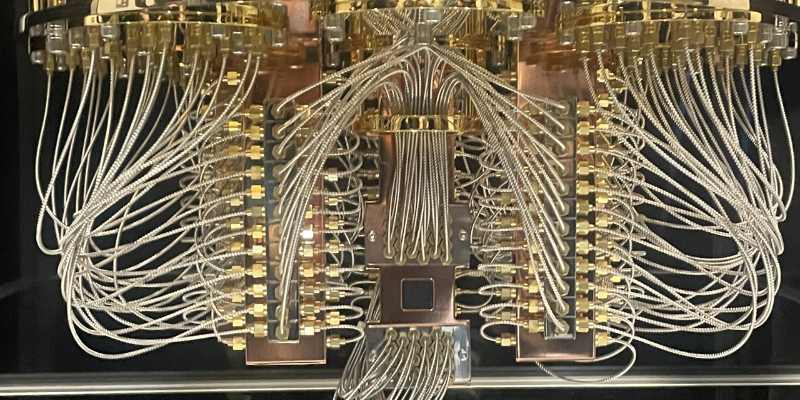

How the AI-enhancing quantum large language model combines artificial intelligence with quantum computing to deliver smarter, faster, and more efficient language understanding. Learn what this breakthrough means for the future of AI

Explore the current state of quantum computing, its challenges, progress, and future impact on science, business, and technology

Advertisement

How AI in real estate is redefining how properties are bought, sold, and managed. Discover 10 innovative companies leading the shift through smart tools and data-driven decisions

Learn how ZenML helps streamline EV efficiency prediction—from raw sensor data to production-ready models. Build clean, scalable pipelines that adapt to real-world driving conditions

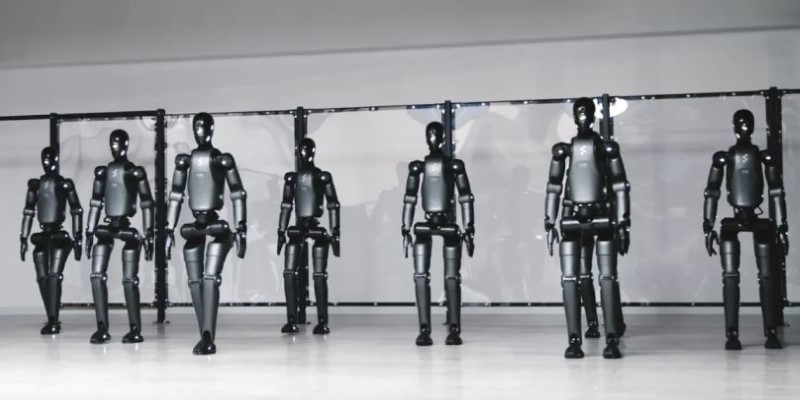

A leading humanoid robot company has introduced its next-gen home humanoid designed to assist with daily chores, offering natural interaction and seamless integration into home life

How a humanoid robot learns to walk like a human by combining machine learning with advanced design, achieving natural balance and human-like mobility on real-world terrain

Advertisement

How Nvidia produces China-specific AI chips to stay competitive in the Chinese market. Learn about the impact of AI chip production on global trade and technological advancements

What makes StarCoder2 and The Stack v2 different from other models? They're built with transparency, balanced performance, and practical use in mind—without hiding how they work

The future of robots and robotics is transforming daily life through smarter machines that think, learn, and assist. From healthcare to space exploration, robotics technology is reshaping how humans work, connect, and solve real-world challenges

Find the 10 best image-generation prompts to help you design stunning, professional, and creative business cards with ease.