
Building a machine learning model isn’t just about choosing the right algorithm. It starts with preparing the data properly. Real-world datasets often mix features with completely different scales and units, which can cause scale-sensitive models to weight some features far more heavily than others and lead to poor predictions. Standardization is a straightforward yet powerful way to fix this imbalance by putting all features on an even playing field.

It doesn’t change the underlying relationships in the data, but it makes those relationships easier for models to detect. Understanding standardization in machine learning helps you get cleaner, fairer results and ensures every feature gets the attention it deserves.

Standardization in machine learning is the process of transforming each numerical feature so that it has a mean of zero and a standard deviation of one. This is done by subtracting the feature’s mean from every value and dividing by the feature’s standard deviation. The result is that features are centered and expressed on a common scale, in units of standard deviations, which makes them directly comparable. Many algorithms assume inputs on similar scales. Without standardization, features with larger ranges can dominate the learning process, overshadowing the importance of smaller-scale features.
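As a minimal sketch of that calculation, z = (x - mean) / std applied column by column, the snippet below uses NumPy. The `standardize` helper and the small `homes` array are hypothetical and exist only for illustration.

```python
import numpy as np

def standardize(X):
    """Return a copy of X with each column shifted to mean 0 and scaled to std 1."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    std = X.std(axis=0)      # population standard deviation (ddof=0)
    std[std == 0] = 1.0      # constant columns become all zeros instead of dividing by zero
    return (X - mean) / std

# Hypothetical data: columns are number of rooms and square footage.
homes = np.array([[2, 850], [3, 1200], [4, 1900], [6, 3100]])
Z = standardize(homes)

print(Z.mean(axis=0))  # each column mean is approximately 0
print(Z.std(axis=0))   # each column standard deviation is approximately 1
```

Guarding against a zero standard deviation is a design choice made here, not a requirement of the method; another option is to drop constant features before scaling.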

For example, in a dataset used to predict home prices, one feature might represent the number of rooms (ranging from 1 to 10), while another represents square footage (ranging from hundreds to thousands). If left as-is, the square footage feature may disproportionately influence the model because of its higher numerical values. Standardization corrects this imbalance and ensures all features are considered on equal terms. This is especially useful for algorithms like k-nearest neighbors, support vector machines, logistic regression, and gradient-based neural networks, all of which rely on distances or gradients that are sensitive to the scale of input data.
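To make the home-price example concrete, the sketch below compares nearest neighbors before and after standardization. The five homes are made-up values, and plain Euclidean distance is assumed as the similarity measure, as a k-nearest neighbors model might use.

```python
import numpy as np

# Hypothetical homes: [rooms, square footage]
homes = np.array([
    [2.0, 1000.0],   # query home
    [8.0, 1010.0],   # very different room count, almost the same area
    [2.0, 1100.0],   # same room count, slightly larger area
    [5.0, 2500.0],
    [3.0, 1800.0],
])

def nearest_to_first(X):
    """Index of the row closest to row 0 by Euclidean distance, excluding row 0 itself."""
    d = np.linalg.norm(X - X[0], axis=1)
    return int(np.argsort(d)[1])

# Standardize each column: subtract its mean, divide by its standard deviation.
standardized = (homes - homes.mean(axis=0)) / homes.std(axis=0)

raw_neighbor = nearest_to_first(homes)
std_neighbor = nearest_to_first(standardized)
print(raw_neighbor, std_neighbor)
```

On the raw values, the 8-room home counts as the closest match because a 10 square foot gap is numerically tiny next to a 100 square foot gap, and the six-room difference barely registers. After standardization the room count carries comparable weight, and the 2-room home becomes the nearest neighbor.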

Standardization is one of several scaling methods, but it serves a distinct purpose. It’s sometimes confused with normalization, which rescales features to fit within a fixed range, often between 0 and 1. Both are linear transformations, so both preserve the shape of the original distribution; the difference is that normalization constrains the values to a limited interval, while standardization gives the data a mean of zero and a standard deviation of one and does not bound it to a specific range. This makes standardization more appropriate when the data is roughly Gaussian and isn’t strictly limited to certain values.

The most common form of normalization, min-max scaling, maps the smallest observed value to 0 and the largest to 1, which can be effective when all data points fall predictably within known bounds. However, it is very sensitive to outliers: a single extreme value sets the range and squeezes the remaining points into a narrow band. Standardization is less affected because it depends on the mean and spread of all values rather than on the two extremes, although the mean and standard deviation are still pulled by outliers. It’s a reasonable default when a feature has no natural bounds or contains moderately extreme values. These qualities make standardization a popular choice for a wide variety of machine learning tasks where scale sensitivity is a concern.
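The short sketch below applies both rescalings to a made-up feature containing one outlier, so the two behaviors can be compared side by side. The values are illustrative only.

```python
import numpy as np

# Hypothetical feature with a single extreme value.
x = np.array([1.0, 2.0, 3.0, 4.0, 100.0])

# Min-max scaling: the outlier defines the top of the [0, 1] range.
minmax = (x - x.min()) / (x.max() - x.min())

# Standardization: unbounded, expressed in standard deviations from the mean.
zscore = (x - x.mean()) / x.std()

print(minmax)  # the four ordinary values all land below 0.05
print(zscore)  # the outlier sits about 2 standard deviations above the mean
```

Both outputs show the outlier distorting the scale: the ordinary values end up close together either way. The standardized values are simply not pinned to a fixed interval, which is why heavily contaminated features may call for a different approach altogether.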

Not every algorithm benefits from standardized data. Models based on decision trees, such as random forests and gradient boosting machines, are insensitive to feature scaling. These models split data based on feature thresholds rather than distance calculations, so standardization offers little advantage in those cases.

On the other hand, algorithms that compute distances or rely on gradient descent usually perform much better when input features are standardized. Clustering methods, k-nearest neighbors, and regularized or gradient-trained linear models can give skewed results without proper scaling, because the largest-scale feature dominates distances, penalties, and gradient steps. Standardization helps these algorithms converge faster and reach better solutions by putting all features on the same scale. It can also shorten training by improving numerical stability during optimization.

It’s also important to apply standardization carefully. Always compute the standardization parameters (the mean and standard deviation of each feature) using only the training data, then apply that same transformation to both the training and test data. This prevents information from the test data from leaking into the training process, which would make evaluation results look better than they really are. Most modern machine learning libraries and pipelines include tools that make this process easier and less prone to mistakes.
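The fit-on-train, apply-to-both pattern can be sketched with a small helper class. `Standardizer` is a hypothetical name written for this example, and the synthetic data and 80/20 split are assumptions.

```python
import numpy as np

class Standardizer:
    """Learns per-column mean and std from one dataset and reuses them on others."""

    def fit(self, X):
        X = np.asarray(X, dtype=float)
        self.mean_ = X.mean(axis=0)
        self.std_ = X.std(axis=0)
        self.std_[self.std_ == 0] = 1.0  # avoid dividing by zero on constant columns
        return self

    def transform(self, X):
        return (np.asarray(X, dtype=float) - self.mean_) / self.std_

# Synthetic data: two features on very different scales.
rng = np.random.default_rng(0)
X = rng.normal(loc=[5.0, 1500.0], scale=[2.0, 600.0], size=(100, 2))
X_train, X_test = X[:80], X[80:]

scaler = Standardizer().fit(X_train)      # parameters come from training data only
X_train_std = scaler.transform(X_train)
X_test_std = scaler.transform(X_test)     # test data reuses the training parameters
```

The standardized test set will generally not have a mean of exactly zero, which is expected because its statistics were never used. In scikit-learn, `StandardScaler` follows the same `fit` and `transform` pattern.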

Standardization offers clear advantages beyond improving model accuracy. In linear models, it makes the coefficients easier to interpret because each feature is on the same scale, allowing for meaningful comparison of their effects. It improves numerical conditioning, which makes algorithms more stable and less prone to errors during computation. It also helps avoid problems caused by features with very large or very small magnitudes, which can otherwise disrupt training.

However, standardization is not always the best or only choice. It assumes that each feature’s distribution can reasonably be summarized by its mean and standard deviation. When a feature is highly skewed or contains many outliers, standardization may not be enough to produce meaningful scales. In such cases, transformations like log-scaling, robust scaling, or even removing extreme values may improve results. Standardization can also reduce interpretability for some audiences, since transformed values lose their original units, which can make explaining results more difficult.
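As a rough illustration of two of those alternatives, the sketch below applies robust scaling (here assumed to mean centering on the median and dividing by the interquartile range) and a log transform to a made-up, heavily skewed income feature. The `robust_scale` helper is hypothetical.

```python
import numpy as np

def robust_scale(x):
    """Center on the median and divide by the interquartile range (IQR)."""
    x = np.asarray(x, dtype=float)
    median = np.median(x)
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x - median) / (iqr if iqr > 0 else 1.0)

# Hypothetical incomes with one extreme value.
incomes = np.array([30_000, 35_000, 40_000, 45_000, 50_000, 2_000_000])

robust = robust_scale(incomes)
logged = np.log1p(incomes)  # log(1 + x) compresses the long right tail

print(robust)
print(logged)
```

Because the median and IQR ignore the tails, the ordinary incomes keep a usable spread after robust scaling while the outlier stays clearly separated. The log transform instead narrows the gap between the outlier and the rest, and the result can still be standardized afterward if a model needs it.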

In practice, standardization is simple to implement and highly effective when used with algorithms that are sensitive to feature scale. Knowing when and how to apply it helps improve performance and makes model behavior more predictable and fair.

Standardization in machine learning adjusts feature values to have zero mean and unit variance, ensuring that no feature overpowers the others simply due to its scale. This allows algorithms to train more efficiently and improves their ability to uncover patterns in the data. It works best with models that rely on distances or gradients, while tree-based models usually don’t require it. By applying standardization thoughtfully, data scientists can create models that are more balanced and reliable. Although it’s not a universal solution, it remains one of the most useful preprocessing techniques when preparing data for sensitive machine learning algorithms.

Advertisement

EY introduced its Nvidia AI-powered contract analysis at Mobile World Congress, showcasing how advanced AI and GPU technology transform contract review with speed, accuracy, and insight

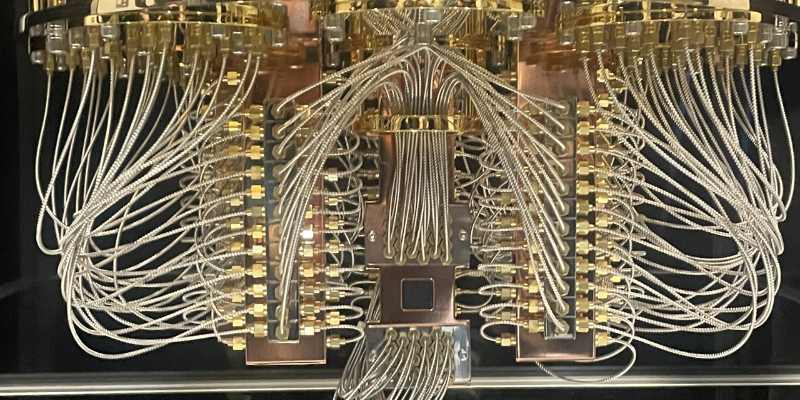

Explore the current state of quantum computing, its challenges, progress, and future impact on science, business, and technology

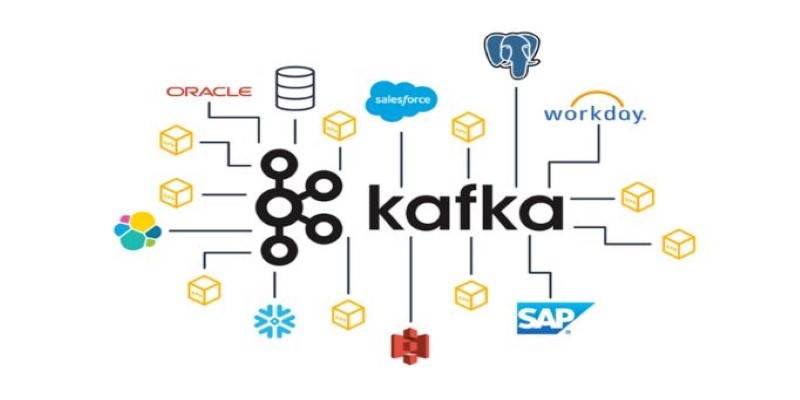

Explore Apache Kafka use cases in real-world scenarios and follow this detailed Kafka installation guide to set up your own event streaming platform

How AI is transforming traditional intranets into smarter, more intuitive platforms that support better employee experiences and improve workplace efficiency

Advertisement

What happens when two tech giants team up? At Nvidia GTC 2025, IBM and Nvidia announced a partnership to make enterprise AI adoption faster, more scalable, and less chaotic. Here’s how

AI in Cars is transforming how we drive, from self-parking systems to predictive maintenance. Explore how automotive AI enhances safety, efficiency, and comfort while shaping the next generation of intelligent vehicles

How Amazon AppFlow simplifies data integration between SaaS apps and AWS services. Learn about its benefits, ease of use, scalability, and security features

Find the 10 best image-generation prompts to help you design stunning, professional, and creative business cards with ease.

Advertisement

Discover how Nvidia's latest AI platform enhances cloud GPU performance with energy-efficient computing.

xAI, Nvidia, Microsoft, and BlackRock have formed a groundbreaking AI infrastructure partnership to meet the growing demands of artificial intelligence development and deployment

The future of robots and robotics is transforming daily life through smarter machines that think, learn, and assist. From healthcare to space exploration, robotics technology is reshaping how humans work, connect, and solve real-world challenges

Learn how ZenML helps streamline EV efficiency prediction—from raw sensor data to production-ready models. Build clean, scalable pipelines that adapt to real-world driving conditions