Advertisement

The steady rise in large language models has pushed developers to strike a balance between performance and efficiency. Many newer models can produce impressive results, but their resource demands often make them inaccessible for everyday use or smaller deployments. Bamba, a hybrid model built on the Mamba2 framework, takes a different path.

Rather than focusing only on scale or brute-force computation, Bamba prioritizes inference efficiency without compromising on capability. It’s not trying to win a race for the biggest model—it’s focused on running the smartest lap with fewer resources. This shift marks a practical move for AI models, especially where compute and memory costs are a bottleneck.

Before unpacking how Bamba works, it helps to understand what makes Mamba2 different from standard transformer models. Transformers, while powerful, are notoriously expensive to run. They process data using attention mechanisms that scale poorly as input sequences grow longer. This makes them impractical in many real-world applications unless trimmed down or heavily optimized.

Mamba2 is a newer architecture that steps away from the typical attention-based design. It’s built on selective state-space models (SSMs), which operate differently. Instead of attending to all tokens across an input sequence, SSMs focus on learning a dynamic, continuous representation of inputs over time. This drastically reduces the computational load, especially for longer sequences. Mamba2 handles sequences in a way that’s more linear in complexity, which means fewer headaches for anyone trying to deploy it at scale.

Bamba picks up from there by combining this efficient backbone with a hybrid approach that draws from both recurrent and convolutional elements. This setup lets it handle both temporal depth and localized detail, striking a smart balance between short-term reactivity and long-term memory. Unlike purely transformer-based setups that often struggle with tradeoffs between depth and speed, Bamba integrates ideas that keep things lean while still preserving contextual understanding.

Inference time—how fast a model can respond once trained—is a key concern for many practical applications. Whether it's customer support, document summarization, or embedded systems in hardware with tight memory limits, slow inference can make a powerful model unusable. Bamba was designed with this constraint in mind.

Because it avoids the quadratic complexity of self-attention and leans on a more linear computation model, Bamba is far faster during inference. This doesn’t just mean less waiting time for responses—it also translates into lower energy consumption and fewer infrastructure requirements. It’s particularly helpful in edge computing environments where resources are tight, but latency still matters.

Bamba’s hybrid nature also plays a role here. Its convolutional components handle local patterns quickly, while the SSM-based logic captures broader sequence dynamics without the usual overhead. This means the model doesn’t need to allocate as much memory or perform as many calculations to keep track of what’s happening across a sequence. It makes fewer passes over data and still produces strong, coherent outputs. And because its components are modular, Bamba can be adapted or scaled to fit different performance tiers without redesigning the whole architecture.

Speed is a strong selling point, but it’s not the only thing that sets Bamba apart. Its architecture makes it particularly well-suited for tasks where both context and efficiency matter. Speech recognition, document parsing, and time-series forecasting are all areas where traditional transformers either lag in performance or require extensive fine-tuning. Bamba brings better baseline efficiency to these domains, allowing models to work with less tuning and more reliability straight out of the box.

Another area where Bamba shines is in streaming data. Since its SSM foundation supports continuous input handling, it doesn’t need to wait for an entire sequence to make sense of it. This is a significant departure from standard models that rely heavily on seeing the full input before making decisions. For use cases like real-time analytics, live transcription, or dynamic control systems, this makes Bamba a strong candidate.

Its lower computational demand also means wider accessibility. Small labs, startups, or even hobbyist developers who can’t afford to train and host billion-parameter models now have an option that performs well without the hardware overhead. This helps level the field and encourages more experimentation and innovation from a broader range of contributors.

Hybrid models like Bamba hint at a larger trend in machine learning: moving away from one-size-fits-all solutions. Transformers have dominated the field for a while, but their costs often make them hard to sustain. As new needs emerge—more context, less computing, better streaming—models like Bamba are showing that there are other ways to solve these problems.

By blending ideas from different architectures, Bamba doesn't just reduce inference time. It reshapes what efficiency means in AI. It suggests that we can get better output with fewer resources, not by compromising on design but by being more deliberate about what each part of a model is doing. Instead of forcing everything through one architecture, it makes room for specialization.

There's also a sustainability argument here. As concerns grow over the environmental impact of massive model training and deployment, more efficient inference models help reduce long-term operational costs—not just in dollars but in energy usage. If AI is going to be embedded everywhere, it has to get lighter. Bamba is a step in that direction.

Bamba isn’t trying to replace the largest language models, but it’s not aiming low either. It offers a smarter, more adaptable path for developers who need speed, context, and reliability without a massive infrastructure bill. By building on the Mamba2 framework and applying a hybrid structure, Bamba manages to squeeze strong performance from a leaner, cleaner architecture. It gives us a glimpse into how future models might work—not by throwing more hardware at the problem, but by designing smarter software that uses what it has more effectively. As needs shift toward real-time applications and edge computing, models like Bamba will likely be part of the new standard.

Advertisement

Discover how Nvidia's latest AI platform enhances cloud GPU performance with energy-efficient computing.

IBM Plans $150B Technology Investment in US, focusing on semiconductors, AI, quantum computing, and workforce development to strengthen innovation and create jobs nationwide

Improve your skills (both technical and non-technical) and build cool projects to set yourself apart in this crowded job market

Microsoft has introduced stronger safeguards and policies to tackle malicious Copilot AI use, ensuring the tool remains safe, reliable, and aligned with responsible AI practices

Advertisement

Explore what large language models (LLMs) are, how they learn, and why transformers and attention mechanisms make them powerful tools for language understanding and generation

Running large language models at scale doesn’t have to break the bank. Hugging Face’s TGI on AWS Inferentia2 delivers faster, cheaper, and smarter inference for production-ready AI

Intel and Hugging Face are teaming up to make machine learning hardware acceleration more accessible. Their partnership brings performance, flexibility, and ease of use to developers at every level

Is self-driving tech still a future dream? Not anymore. Nvidia’s full-stack autonomous driving platform is now officially in production—and it’s already rolling into real vehicles

Advertisement

How AI is transforming traditional intranets into smarter, more intuitive platforms that support better employee experiences and improve workplace efficiency

What makes StarCoder2 and The Stack v2 different from other models? They're built with transparency, balanced performance, and practical use in mind—without hiding how they work

AI in Cars is transforming how we drive, from self-parking systems to predictive maintenance. Explore how automotive AI enhances safety, efficiency, and comfort while shaping the next generation of intelligent vehicles

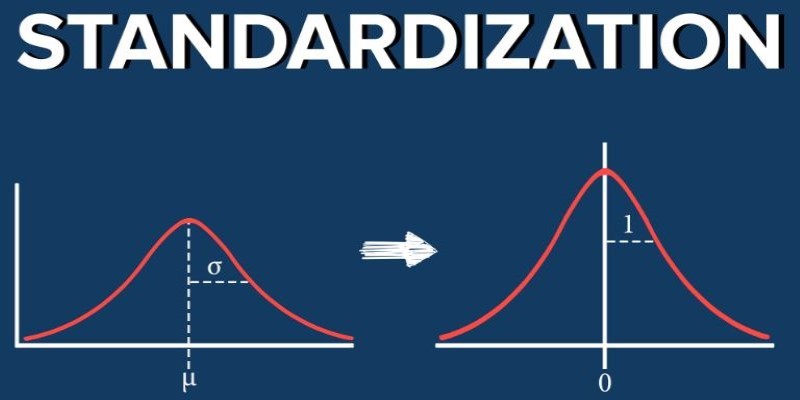

What standardization in machine learning means, how it compares to other feature scaling methods, and why it improves model performance for scale-sensitive algorithms